- Services

Technology Capabilities

- Product Strategy & Experience Design: Define software-driven value chains, create purposeful interactions, and develop new segments and offerings.

- Digital Business Transformation: Advance your digital transformation journey.

- Intelligence Engineering: Leverage data and AI to transform products, operations, and outcomes.

- Software Product Engineering: Create high-value products faster with AI-powered and human-driven engineering.

- Technology Modernization: Tackle technology modernization with approaches that reduce risk and maximize impact.

- Embedded Engineering & IT/OT Transformation: Develop embedded software and hardware. Build IoT and IT/OT solutions.

- Industries

- GlobalLogic VelocityAI

- Insights

Blogs | GlobalLogic | July 27, 2023 | Exploring Snowpark and Streamlit for Data Science

I’m Janki Makwana, a Data Scientist at GlobalLogic. I have been working with a major re...

Blogs | July 18, 2023 | Manik Jandial | View on payment industry modernisation: Enablers of change

Welcome to the second part of our two-part series on the evolving payment industry! In ...

- About

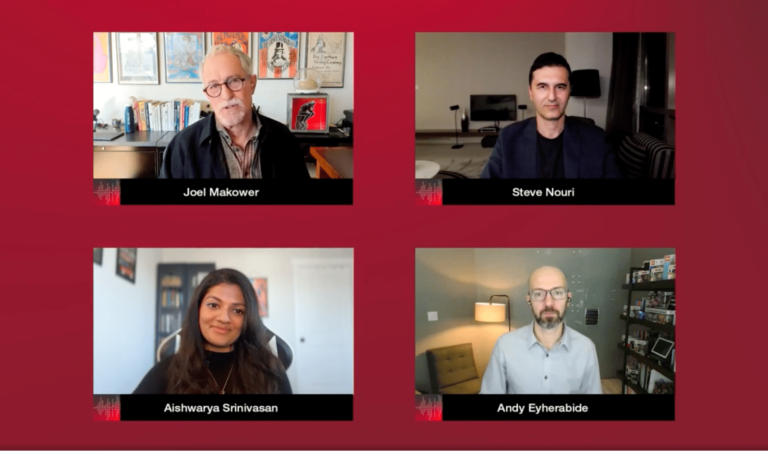

Blogs | 19 February 2025 | GenAI in Action: Lessons from Industry Leaders on Driving Real ROI

Blogs | GlobalLogic | 19 August 2024 | GenAI and my family reunion

GenAI's transformative impact extends far beyond the tech industry, reaching...
