Test automation: how to avoid common mistakes

Published on October 28, 2016

Different people expect different things from test automation. It often happens that, after some time, those initial expectations go unmet because a rather large investment in automation brings no benefit. Let’s try to figure out why this happens and how to avoid repeating common mistakes.

To be or not to be

You definitely need test automation if:

- Your project will last a year or more. The number of tests that have to be run as part of regression grows rapidly, and that routine is the first thing to eliminate. Testers should test, not mechanically walk through test cases.

- You have a distributed development team or a team of more than two developers. Each developer must be sure their changes do not break someone else’s code. Without automated tests, they will find out at best a day or two later and at worst from users.

- You support several versions of the product and release patches and service packs for each of them. Everything is clear here: testing on different configurations is routine work, and it should be eliminated.

- You are developing a service whose main task is processing and transforming various data. Manually entering data into the system and visually checking the results, or sending requests and analyzing the responses, is not something people should be doing by hand every day.

- You work in an agile process with short iterations and frequent releases. A sprint leaves almost no time for a manual regression run, yet you need to know that everything still works in the areas the testers did not get to.

If none of this describes your project, you most likely don’t need to worry about automation.

Why automated tests are needed at all

The purpose of any automation is to free people from routine work, and test automation is no exception. However, there is a common misconception that automated tests should completely replace the tester’s manual work and that scripts should test the product. This, of course, is nonsense. No script can yet replace a living person, and no script can actually test. All a script can do is repeat actions programmed by a person and signal when something goes wrong, i.e., perform simple checks. What it can do is perform those checks quickly and without human intervention.

This property lets us learn about any change in the quality of the product under test faster than a human could. Besides speed, other factors determine how effective automation is: completeness of test coverage, clarity and reliability of results, development and maintenance costs, ease of launching runs and analyzing results, and so on. The key performance indicators are speed, coverage quality, and cost, and these are what you should rely on.

Why expectations are not met

There are quite a few reasons why automation may not live up to expectations. All of them, one way or another, stem from wrong decisions in engineering, in management, or sometimes in both at once.

Management decisions are a topic for a separate article, but for now I will simply highlight the most important mistakes without explanation:

- Trying to save money on automation specialists. A manager who thinks they can send their testers to a Selenium course and have them automate everything is mistaken.

- Attempting to implement automation without a well-thought-out strategy and plan, in the spirit of “Let’s implement it and we’ll see.” Or, even worse, automation for the sake of automation: “The neighbor has it, so I need it too; why is unclear, but it is necessary.”

- Starting too late: tests get automated only once the testers are completely overwhelmed.

- Believing that it’s cheaper to hire students to run the regression by hand (i.e., not automating at all, even though the project shows every sign of needing it).

By engineering decisions, I mean the decisions engineers make while developing and implementing an automation strategy: the choice of tools, types of testing, frameworks, and so on.

Let’s look at a few of these from an engineering perspective.

Why automating only UI tests is evil

The most common mistake is deciding to automate tests exclusively through the graphical user interface. At the time it is made, such a decision does not look bad at all. Sometimes it even serves its purpose for quite a long time, and it can be entirely sufficient if the product is already in maintenance and no longer being developed. But for actively developed projects it is, as a rule, not the best long-term approach.

UI testing is what testers do; it is the natural way to test an app. Moreover, it simulates how users will interact with the application. It would seem to be the ideal, and only correct, option and the first thing to automate. But there is, as they say, a catch:

- UI tests are unstable;

- UI tests are slow.

They are unstable because the tests depend on the “layout” of the application interface. If you change the order of buttons on the screen or add or remove an element, the tests may break. The automation tool may fail to find the right element or may press the wrong button entirely, which changes the logic of the test.

The more tests you have, the more time you must spend fixing and maintaining them. As a result, the results of such tests become less reliable because of frequent false positives (failures even though the application works). At some point, the automation engineer spends all their time repairing broken scripts, and nothing new gets created.

These tests are slow because the user interface itself is slow: it requires redrawing, reloading resources, waiting for data to appear, and so on. The test script spends most of its time waiting, and waiting is waste. The test may also fail because it tries to use an element that has not yet rendered on a slow UI.

When a UI test run takes two days, even with independent groups of tests running in parallel on several servers, it is very hard to use such automation as a day-to-day quality indicator.

What to do?

Stabilizing. In fact, I deliberately exaggerated the instability problem: it is easy to solve, yet automation engineers often don’t even try to solve it.

The first thing to do, in any case, is to agree with the developers that they will always assign unique attributes to UI elements so that the automation tool can identify them precisely. In other words, abandon five-level XPath expressions and CSS selectors wherever possible, and use unique id, name, and similar attributes everywhere. This should be clearly spelled out in the development guidelines and be one of the items in the developers’ definition of done. Then, even after major changes to the user interface, you may get away with only a slight scare.

In response, you may hear the objection that this is overhead for developers. Maybe so, but they only need to do it once and can then forget about it. In return, it saves automation engineers hundreds of hours.
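To make the difference concrete, here is a toy sketch in plain Python with no Selenium involved. All names are hypothetical. The `Element` tree stands in for the page. `find_by_path` mimics a positional locator such as a long XPath, following child indexes from the root. `find_by_id` mimics lookup by a unique id. After a redesign inserts a button, the positional locator silently lands on the wrong button, while the id still resolves.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Element:
    """Toy stand-in for a UI element (not a real Selenium WebElement)."""
    tag: str
    id: Optional[str] = None
    text: str = ""
    children: list[Element] = field(default_factory=list)


def find_by_path(root: Element, path: list[int]) -> Optional[Element]:
    """Positional lookup: follows child indexes, like a long XPath does."""
    node = root
    for index in path:
        if index >= len(node.children):
            return None
        node = node.children[index]
    return node


def find_by_id(root: Element, element_id: str) -> Optional[Element]:
    """Attribute lookup: searches the whole tree for a unique id."""
    if root.id == element_id:
        return root
    for child in root.children:
        found = find_by_id(child, element_id)
        if found is not None:
            return found
    return None


def build_form(redesigned: bool) -> Element:
    buttons = [Element("button", id="cancel-btn", text="Cancel"),
               Element("button", id="save-btn", text="Save")]
    if redesigned:
        # The redesign adds a new button at the front of the toolbar.
        buttons.insert(0, Element("button", id="help-btn", text="Help"))
    return Element("form", children=[Element("div", children=buttons)])


if __name__ == "__main__":
    for redesigned in (False, True):
        form = build_form(redesigned)
        by_path = find_by_path(form, [0, 1])
        by_id = find_by_id(form, "save-btn")
        print(f"redesigned={redesigned}: path -> {by_path.text}, id -> {by_id.text}")
```

Before the redesign, both lookups find “Save”. Afterwards, the positional one returns “Cancel”, which is exactly the “press the wrong button” failure described above.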

The application under test must lend itself to testing. If it does not, the application must be modified or discarded.

It is also worth teaching the automation tool to wait until an element becomes available for interaction, or using something like Selenide from the start, where this problem is handled by design.
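The waiting idea can be sketched without any browser at all. The snippet below is a plain-Python illustration, not Selenium’s API. `wait_until` is a hypothetical helper that polls a condition until it returns something truthy or a timeout expires. `SlowPage` is a made-up page whose button appears only after a few polls. Unlike a fixed `time.sleep`, polling stops waiting as soon as the element is ready. If the element never appears, it fails with a clear timeout instead of acting on a missing element.

```python
from __future__ import annotations

import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 5.0,
               poll_interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # e.g. the element, once it is ready
        if time.monotonic() >= deadline:
            # Fail loudly instead of letting the test act on a missing element.
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


class SlowPage:
    """Hypothetical page whose Save button 'renders' only after a few polls."""

    def __init__(self, renders_after: int) -> None:
        self._polls_left = renders_after

    def find_save_button(self) -> Optional[str]:
        if self._polls_left > 0:
            self._polls_left -= 1
            return None  # not rendered yet
        return "save-btn"


if __name__ == "__main__":
    page = SlowPage(renders_after=3)
    button = wait_until(page.find_save_button, timeout=2.0, poll_interval=0.05)
    print("clicking", button)
```

In a real Selenium suite you would normally rely on its built-in explicit waits (`WebDriverWait` with expected conditions) or on Selenide’s automatic waiting rather than hand-rolling a loop like this.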

Speeding up. While instability is fairly easy to deal with, slow tests require a comprehensive solution, because the problem affects the development process as a whole.

The first and easiest way to speed things up is to deploy the application and run the tests on faster hardware, avoid network delays between the tests and the application, and so on. In other words, “solve” the problem with hardware and the architecture of the test environment. This alone can cut execution time significantly, by a factor of two or more.

The second step is to design the test framework and the test cases for independent, parallel execution from the very beginning. Running tests in parallel significantly reduces execution time. There are limitations, though. First, the logic of the application under test does not always allow it to be tested in several threads at once; such cases are fairly specific and rare, but they do happen. Second, everything again depends on the hardware: you cannot parallelize indefinitely.
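A minimal sketch of why independence matters. It assumes hypothetical tests that do nothing but wait, as UI tests mostly do. Because no test shares state with another or depends on run order, they can be handed to a `ThreadPoolExecutor` from Python’s standard library. Threads suit this case because the work is waiting, not computation.

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def make_test(name: str, duration: float) -> Callable[[], tuple[str, bool]]:
    """Build a hypothetical, independent test that only waits, like a slow UI step."""
    def test() -> tuple[str, bool]:
        time.sleep(duration)  # stands in for waiting on rendering or network
        return name, True     # (test name, passed?)
    return test


def run_serial(tests: list[Callable[[], tuple[str, bool]]]) -> list[tuple[str, bool]]:
    return [test() for test in tests]


def run_parallel(tests: list[Callable[[], tuple[str, bool]]],
                 workers: int) -> list[tuple[str, bool]]:
    # Safe only because the tests share no state and need no particular order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(test) for test in tests]
        return [future.result() for future in futures]  # submission order kept


def timed(fn, *args):
    """Return (result, elapsed seconds) for fn(*args)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


TESTS = [make_test(f"test_{i}", 0.1) for i in range(8)]

if __name__ == "__main__":
    _, serial_s = timed(run_serial, TESTS)
    _, parallel_s = timed(run_parallel, TESTS, 4)
    print(f"serial: {serial_s:.2f} s, 4 workers: {parallel_s:.2f} s")
```

With 4 workers, the eight tests finish in roughly two waves instead of eight. This also shows the ceiling mentioned above. Once you have more workers than tests, or more than the hardware and the application under test can handle, extra workers stop helping.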

The third and most radical step is to create as few UI tests as possible. Fewer tests means the results arrive sooner.

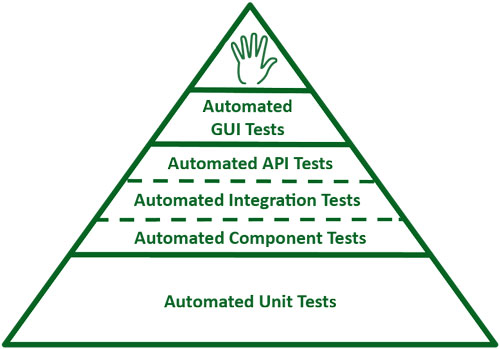

Testing pyramid

Remember the well-known testing pyramid?

The pyramid is a very convenient metaphor: it clearly shows the desired number of automated tests at each level of the system architecture. There should be many low-level unit tests and very few high-level UI tests. The question is why exactly, and why does everyone make such a fuss about this pyramid?

The answer is simple. Let’s recall what finding and fixing a problem usually looks like when an application is tested manually. First, the developer changes the code. The tester waits for the new build to be compiled and deployed to the test environment. The tester runs the tests, finds a problem, and files a ticket in the bug tracker. The developer responds to the ticket right away and fixes the problem. That means new code changes, followed by another build, deployment, and retest. If everything is OK, the ticket is closed. The time from detecting a problem to fixing it ranges from several hours to several days or even weeks.

What happens when the same test is automated through the UI? You still have to wait until the new version is compiled and deployed, and then until the tests complete. Then you need to analyze the results of the run. If there were failures, you need to determine whether the problem lies in the test itself or in the application. Then you rerun the failed test by hand to find out exactly what the problem is. File a ticket, wait for it to be resolved, rerun the test, make sure it is now green, and close the ticket. Again, anywhere from a few hours to a few days or weeks. The only advantage is that the test is automated, so while it runs, the tester can test something else.

It’s a different story with automated tests that communicate with the application’s backend through an API. Here there are some interesting options:

- Tests run against a fully deployed application with all its external systems. Compared to pure UI tests, execution and result analysis take much less time, since there are far fewer false positives. Everything else is the same as with UI tests.

- Tests run right after the build, without deployment to the test environment, using stubs for external systems. Because they run as part of the build, the problems they find often don’t require tickets: the run is triggered by the developer who made the code changes, and that developer fixes the problems immediately. Here the time saved between detecting and fixing a problem is simply huge.

- And, of course, the “cream of the crop” is unit and component tests. They don’t require building the entire project; they run right after the module compiles, without leaving your favorite IDE, and feedback is instant. The time from making a change to fixing any resulting problem is measured in minutes.
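The second option in the list above runs tests right after the build, with stubs in place of external systems. It might look like the following sketch, in which everything is hypothetical. `OrderService` stands in for backend logic that an API would expose. `StubGateway` replaces a real payment provider, so the tests need neither a deployed environment nor a network. The same shape, without any external dependency at all, is what a fast component test looks like.

```python
from __future__ import annotations

import unittest
from typing import Protocol


class PaymentGateway(Protocol):
    """Interface of a hypothetical external system (a payment provider)."""

    def charge(self, customer_id: str, amount_cents: int) -> bool: ...


class OrderService:
    """Hypothetical backend logic that an API endpoint would expose."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway

    def place_order(self, customer_id: str, amount_cents: int) -> str:
        if amount_cents <= 0:
            return "rejected: invalid amount"
        if not self._gateway.charge(customer_id, amount_cents):
            return "rejected: payment declined"
        return "accepted"


class StubGateway:
    """In-memory stub: no network, no deployed environment, instant answers."""

    def __init__(self, approve: bool) -> None:
        self.approve = approve
        self.calls: list[tuple[str, int]] = []  # recorded for assertions

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        self.calls.append((customer_id, amount_cents))
        return self.approve


class OrderServiceTest(unittest.TestCase):
    def test_accepts_when_payment_approved(self) -> None:
        gateway = StubGateway(approve=True)
        self.assertEqual(OrderService(gateway).place_order("c1", 500), "accepted")
        self.assertEqual(gateway.calls, [("c1", 500)])

    def test_rejects_when_payment_declined(self) -> None:
        service = OrderService(StubGateway(approve=False))
        self.assertEqual(service.place_order("c1", 500), "rejected: payment declined")

    def test_invalid_amount_never_reaches_gateway(self) -> None:
        gateway = StubGateway(approve=True)
        self.assertEqual(OrderService(gateway).place_order("c1", 0),
                         "rejected: invalid amount")
        self.assertEqual(gateway.calls, [])
```

Saved as a module, these tests run with `python -m unittest` in a fraction of a second. That speed is what makes it practical for the developer who made the change to run them before anyone else sees the code.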

Clearly, the lower you go down the pyramid, the faster the corresponding automated tests run. This means you can run many more tests in the same amount of time. Accordingly, the lower the level, the more effective the tests you can create there in terms of feedback time and coverage.

A comprehensive approach

It is important to understand that unit tests test the code. They give developers confidence that their part of the code works as intended and, most importantly, that it does not break the logic of a colleague’s code. This works because the colleague’s code is also covered by unit tests, and developers run those tests before committing to the repository.
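Here is a small sketch of this mutual protection, using hypothetical names. Developer A owns the shared helper `normalize_email`, developer B’s `is_registered` relies on it, and each has written unit tests. `run_all_unit_tests` stands in for the pre-commit run of the whole team’s tests, not just the tests of the module being changed.

```python
from __future__ import annotations

import unittest


# Developer A owns this shared helper.
def normalize_email(raw: str) -> str:
    """Trim whitespace and lowercase, so equal addresses compare equal."""
    return raw.strip().lower()


# Developer B's code relies on the helper's behavior.
def is_registered(registered: set[str], raw_email: str) -> bool:
    return normalize_email(raw_email) in registered


class NormalizeEmailTest(unittest.TestCase):  # written by A
    def test_trims_and_lowercases(self) -> None:
        self.assertEqual(normalize_email("  Ann@Example.COM "), "ann@example.com")


class IsRegisteredTest(unittest.TestCase):  # written by B
    def test_lookup_ignores_case_and_spaces(self) -> None:
        self.assertTrue(is_registered({"ann@example.com"}, " ANN@example.com"))

    def test_unknown_address_is_not_registered(self) -> None:
        self.assertFalse(is_registered({"ann@example.com"}, "bob@example.com"))


def run_all_unit_tests() -> unittest.TestResult:
    """The pre-commit run: everyone's unit tests, not only the changed module's."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite([
        loader.loadTestsFromTestCase(NormalizeEmailTest),
        loader.loadTestsFromTestCase(IsRegisteredTest),
    ])
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Suppose developer A later changes `normalize_email` to stop lowercasing. B’s `test_lookup_ignores_case_and_spaces` then fails on A’s machine before the commit, rather than surfacing days later in a UI test or in production.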

UI tests test the entire system, i.e., what the user will actually use. Having such tests is critical.

There is one general recommendation here: have every type of automated test, in the right proportion, at each level. Only then can you get a real return from them.

Test-Driven Development is no longer even a recommendation; developers should practice it by default. Only then can you avoid difficulties during refactoring and the typical problems of development in large teams.

All the functional tests that testers ran during the sprint should be pushed down to the API level: negative, positive, combinatorial, and so on. This creates a fast and stable regression suite.
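To illustrate such a suite, here is a sketch built around a hypothetical `create_account` handler that returns an HTTP-style status code. It is called in-process for brevity; a real API test would send requests to the running backend. `itertools.product` generates the positive combinations. A table of invalid payloads covers the negative cases, and `subTest` reports each failing case separately instead of stopping at the first one.

```python
import itertools
import unittest

SUPPORTED_CURRENCIES = ("EUR", "USD")
SUPPORTED_PLANS = ("basic", "pro")


def create_account(payload: dict) -> int:
    """Hypothetical API handler; returns an HTTP-style status code."""
    if payload.get("currency") not in SUPPORTED_CURRENCIES:
        return 400
    if payload.get("plan") not in SUPPORTED_PLANS:
        return 400
    age = payload.get("age")
    if not isinstance(age, int) or age < 18:
        return 422
    return 201


class CreateAccountApiTest(unittest.TestCase):
    def test_every_valid_combination_is_accepted(self) -> None:
        # Positive + combinatorial: each currency with each plan,
        # including the lower age boundary.
        for currency, plan, age in itertools.product(
                SUPPORTED_CURRENCIES, SUPPORTED_PLANS, (18, 65)):
            with self.subTest(currency=currency, plan=plan, age=age):
                payload = {"currency": currency, "plan": plan, "age": age}
                self.assertEqual(create_account(payload), 201)

    def test_invalid_input_is_rejected(self) -> None:
        # Negative cases: (payload, expected status).
        cases = [
            ({"currency": "GBP", "plan": "basic", "age": 30}, 400),
            ({"currency": "USD", "plan": "gold", "age": 30}, 400),
            ({"currency": "USD", "plan": "basic", "age": 17}, 422),
            ({"currency": "USD", "plan": "basic", "age": "30"}, 422),
            ({}, 400),
        ]
        for payload, expected in cases:
            with self.subTest(payload=payload):
                self.assertEqual(create_account(payload), expected)
```

Each new supported currency or plan automatically multiplies the positive cases, at no cost in UI time.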

Only acceptance tests are implemented at the UI level: the so-called Happy Path or end-to-end scenarios that are shown during the demo. This applies to both web and mobile applications.

Thus, if you simply follow the recommendations of the pyramid, you can get very fast tests and excellent coverage at reasonable development and maintenance costs.

Summary

Not all projects need full-fledged automation: some may just need helper scripts to make life easier for testers. But a project may be under active development and stay that way for a long time, involve many people, and have a full-fledged testing department. For such a project, automation is indispensable.

Excellent test automation is possible if the right decisions about automated tests are made from the very beginning, at every level of the system architecture. That alone is the key to success.

Yaroslav Pernerovsky, Quality Assurance Consultant, GlobalLogic