- Послуги

Технологічні рішення

Технологічні рішення- Продуктова Стратегія та Дизайн Користувацького ДосвідуОкресліть створені програмним забезпеченням ланцюжки доданої вартості, створіть взаємодії, нові сегменти та пропозиції.

- Цифрова Трансформація БізнесуАдаптуйтесь, еволюціонуйте та зростайте у цифровому світі

- Інтелектуальна інженеріяВикористовуйте дані та АІ для трасформації продуктів, операційної діяльності та покращення бізнес результатів

- Розробка програмного забезпеченняПрискорте вихід на ринок продуктів, платформ та послуг.

- Технологічна МодернізаціяЗбільшуйте ефективність і гнучкість за допомогою модернізованих систем і застосунків.

- Вбудована інженерія та трансформація IT/OTСтворюйте та підтримуйте програмне забезпечення від чіпа до хмари для підключених пристроїв.

- Індустрії

- GlobalLogic VelocityAI

- Блоги

- Про нас

Press ReleaseGlobalLogicJanuary 10, 2025GlobalLogic оголошує про зміну керівництва: Сріні Шанкар ...

САНТА-КЛАРА, Каліфорнія — 10 січня 2025 року — Компанія GlobalLogic Inc., що є частиною...

Press ReleaseGlobalLogicDecember 18, 2024

Press ReleaseGlobalLogicDecember 18, 2024GlobalLogic і Nokia стали партнерами для створення 5G-інновацій

GlobalLogic оголосила про партнерство з Nokia для прискорення впровадження передових 5G...

- Кар’єра

Published on October 16, 2020Шлях до BigData. Частина перша. З чого почати?

ПоділітьсяRelated Insights GlobalLogic17 February 2025

GlobalLogic17 February 2025 GlobalLogic12 February 2025Переглянути всю статистику

GlobalLogic12 February 2025Переглянути всю статистику Oleh Moroz13 August 2024Recommended authorsAssociate Manager, Engineering, GlobalLogicLead Software Engineer, Engineering, GlobalLogicView all authorsSenior Manager, TAG Lead, GlobalLogic

Oleh Moroz13 August 2024Recommended authorsAssociate Manager, Engineering, GlobalLogicLead Software Engineer, Engineering, GlobalLogicView all authorsSenior Manager, TAG Lead, GlobalLogicДавайте створювати інженерний вплив разом

GlobalLogic надає унікальний досвід і експертизу на перетині даних, дизайну та інжинірингу.

Зв'яжіться з намиAnalyticsData EngineeringCross-IndustryAuthor: Oleksandr Fedirko, Solution Architect, Trainer, GlobalLogic Ukraine

My name is Oleksandr Fedirko. For over 5 years, I have been designing and architecting BigData solutions that allow you to obtain qualitatively new knowledge through comprehensive analysis of big data. I am one of the leaders of the BigData practice at GlobalLogic and am responsible for the development of this expertise in the Central and Eastern Europe region. I am a trainer for courses within GlobalLogic and an active speaker at many industry forums and conferences on BigData and analytics. Among the technologies I specialize in are: multi-threaded data processing, relational databases, NoSQL, data warehouses, query processing systems.

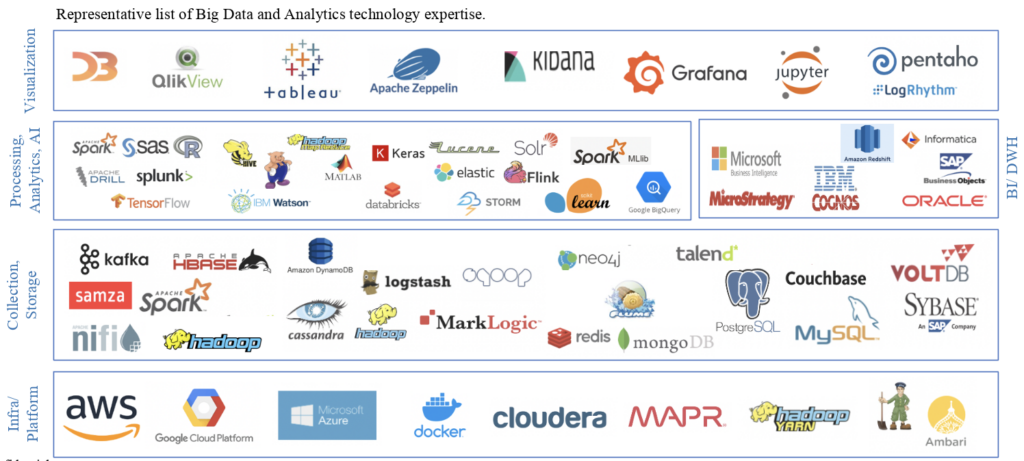

Technological jungle

If you start Googling “top technology trends,” you will probably see BigData technologies such as data integration, data engineering, and big data among the search results. And it is not surprising, because BigData is actually one of the most popular technologies in the IT market and plays a key role in many business industries on the path to digital transformation.

If you don’t want to be left out of this powerful trend in the world of technology, you’ve probably already started studying or wondering where to start on the path to mastering BigData technologies. But without a guide on this path, it can feel like you’re wading through a jungle.

So, developers, engineers, and architects, I’ve laid out some routes for you so you don’t get lost in these thickets.

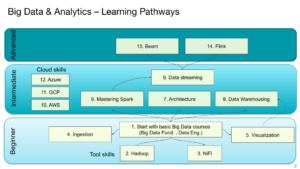

I organized the entire path into 14 steps, divided into 3 levels, from Easy to Hard.

Level 1 is for beginners who are just getting interested in BigData.

This is the Easy level, using gaming terminology. Five steps to help you form a basic understanding of the topic.

- Start with one of the basic Big Data Fundamentals courses, which will give you a general understanding of Big Data and data processing toolkits in the Cloud or on-premises. Then you should dive deeper and learn some of the most popular tools.

- Hadoop is the most popular free software platform and framework for organizing distributed storage and processing of large data sets.

- NiFi is one of the simplest tools for automating data flow between software systems.

- Ingestion. It’s time to understand exactly how data gets into the platform. Master the process of receiving, entering, and processing data for further use or storage.

- The basics of data visualization are essential for every Big Data engineer, so don’t neglect this skill.

Level 2 — Intermediate. You are now ready for batch data processing.

This can already be called Medium Level . Here you have 7 more levels to master.

- It’s time to master Spark. It’s a high-performance engine for processing data stored in a Hadoop cluster. Compared to the MapReduce engine provided by Hadoop, Spark provides 100 times the performance when processing data in memory and 10 times the performance when placing data on disk. Data processing applications are most often written in Scala and Python.

- Next, you will need to familiarize yourself with the architecture of BigData projects.

- And then – figure out how to organize data warehouses.

- Data streaming. Most often done using Spark in real-time or near-real-time.

- You can’t do without cloud technologies anymore, as customers increasingly prefer cloud solutions to avoid wasting resources on DevOps setup and supporting local systems. Start, for example, with AWS, Amazon’s commercial cloud computing platform.

- Google Cloud Platform is a set of cloud services that run on the same infrastructure that Google uses for its consumer products, such as Google Search and YouTube. In addition to management tools, it also provides a range of modular cloud services for computing, data storage, data analytics, and machine learning.

- Azure is a cloud platform and infrastructure from Microsoft designed for cloud computing application developers and designed to simplify the process of creating online applications.

Level 3 — Advanced. Here you should improve your streaming skills.

At this stage, two final “bosses” await you.

- Apache Flink is an open source framework for implementing stream processing from the Apache Software Foundation. Flink supports data stream programming in both parallel and pipelined modes. In pipelined mode, it allows for the implementation of task sequencing and task flow. Flink also supports iterative algorithms.

- Apache Beam is a unified, open-source programming model for defining and executing data processing pipelines, including ETL, batch, and streaming processing.

Below I have compiled a list of online courses with links that I recommend starting with. These are, in my opinion, the best courses from the most popular platforms – Coursera, Udacity and Udemy. A full list of links to all blocks is available to GlobalLogic consultants. Most of these courses are paid, but GlobalLogic Education has a partnership with Udemy, so our consultants and BigData practitioners have the opportunity to take them on this platform for free.

Level 1 — Beginner

- Big Data is a whole specialization, you can take separate courses

- Hands-On Hadoop – the course reveals the main components of the Hadoop ecosystem and their purpose. High rating, practical tasks.

- Introduction to Apache NiFi – a brief description of typical NiFi usage scenarios.

- Data Visualization in Tableau – explores the topic of using Tableau as one of the most popular visualization tools.

Level 2 — Intermediate

- Spark Scala – The course focuses on using Spark as a batch processing tool.

- Spark Python – practical skills in using Spark with the Python programming language.

- BI & DWH is a good excursion into classic data warehouse modeling.

- Data Streaming – the course provides the basics of data streaming processing and talks about typical usage scenarios.

Level 3 – Advanced

- Apache Beam – the course teaches a unified approach to data processing using the Apache Beam framework.

- Apache Flink – practical skills with one of the most popular technologies for real-time streaming data processing.

GlobalLogic also has its own program: GL BigData ProCamp .

We are holding it for the second year in a row. The goal is to develop Big Data expertise at GlobalLogic and train and attract new engineers. Recruitment is currently underway, so if you want to join the next group, details and the registration form are at the link.

ProCamp will be useful for those who have already completed a few basic online courses and tried something in practice. We will analyze the following topics:

- BigData Fundamentals

- Data Warehousing Fundamentals

- Apache NiFi

- Kafka as Message Broker

- Hadoop Fundamentals

- Apache Spark

- Orchestrations: Oozie, AirFlow

- Google Cloud

That’s it for today. Next week, we’ll continue our journey and talk about GlobalLogic projects using Big Data, and talk more about the special GL BigData ProCamp .

Don’t switch!