
Introduction

Over the last few decades, organizations have generated huge amounts of data from many different types of sources. Enterprises increasingly want to use modern data paradigms to drive better decisions and actions. Doing so gives them an opportunity to increase efficiency, adopt new ways of doing business, and optimize spending.

However, many companies struggle with data issues. The technology stacks involved are advanced, and the data pipelines are complex and keep changing as business goals evolve. Adopting best practices for data quality and validation has therefore become essential to ensure that data remains usable for the downstream analytics that produce insights.

In this blog, we look at data quality requirements and the core design of a solution that helps enterprises perform data quality checks and validation in a flexible, modular, and scalable way.

Data Quality Requirements

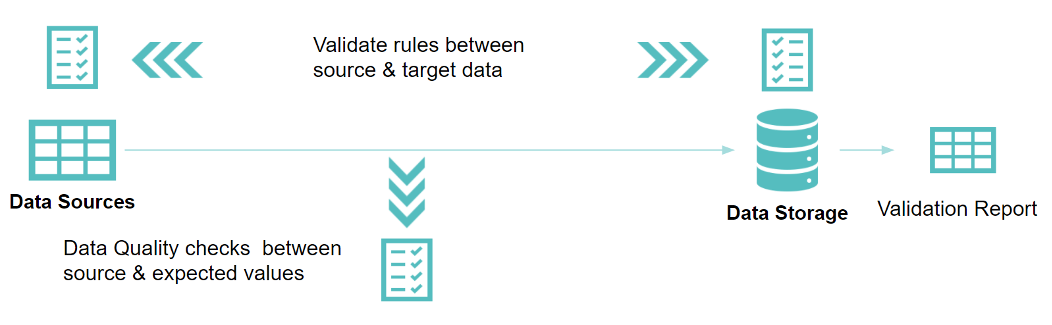

A data platform integrates data from a variety of sources. It delivers processed, cleansed datasets that meet quality and regulatory requirements to analytical systems, where insights can be generated from them. Data moving from the sources to the storage layers needs to be validated, either within the data integration pipeline itself or independently, by comparing the source with the sink.
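The independent source-versus-sink comparison can be sketched in plain Python. This is a minimal illustration, assuming both datasets fit in memory as lists of dictionaries; a real implementation would run the same logic on Spark DataFrames. The function names (`row_digest`, `dataset_fingerprint`, `profile`, `compare_completeness`) are hypothetical. Each row is hashed with SHA-256 over all of its columns, and the per-row digests are sorted before being combined so that row order does not affect the result.

```python
import hashlib
import math
from typing import Any, Dict, List, Sequence

Row = Dict[str, Any]
NULL_MARKER = "\\N"  # stands in for NULL so that None and "" hash differently
SEPARATOR = "\x1f"   # ASCII unit separator placed between column values


def row_digest(row: Row, columns: Sequence[str]) -> str:
    """SHA-256 of all column values, concatenated in a fixed column order."""
    payload = SEPARATOR.join(
        NULL_MARKER if row[c] is None else str(row[c]) for c in columns
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def dataset_fingerprint(rows: List[Row], columns: Sequence[str]) -> str:
    """Combine per-row digests; sorting makes the result independent of row order."""
    combined = hashlib.sha256()
    for digest in sorted(row_digest(r, columns) for r in rows):
        combined.update(digest.encode("ascii"))
    return combined.hexdigest()


def profile(rows: List[Row], column: str) -> Dict[str, Any]:
    """Profiling statistics for one numeric column, excluding nulls."""
    values = [r[column] for r in rows if r[column] is not None]
    if not values:
        return {"non_null": 0}
    total = math.fsum(values)  # fsum is exact, so row order cannot change the sum
    return {
        "non_null": len(values),
        "min": min(values),
        "max": max(values),
        "sum": total,
        "mean": total / len(values),
    }


def compare_completeness(
    source: List[Row],
    target: List[Row],
    columns: Sequence[str],
    numeric_columns: Sequence[str],
) -> Dict[str, bool]:
    """Return one pass/fail flag per completeness check."""
    results = {
        "row_count": len(source) == len(target),
        "full_hash": dataset_fingerprint(source, columns)
        == dataset_fingerprint(target, columns),
    }
    for col in numeric_columns:
        results[f"profile:{col}"] = profile(source, col) == profile(target, col)
    return results
```

Sorting the digests keeps duplicate rows significant. An XOR of the row digests would also be order-independent, but it lets pairs of identical rows cancel each other out.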

Below are some of the requirements that a data quality and validation solution needs to address:

- Check Data Completeness: Validate results between the source and target data sources, for example:
  - Compare row counts
  - Compare the output of column value aggregations
  - Compare a subset of the data without hashing, or the full dataset using SHA-256 hashes of all columns
  - Compare profiling statistics such as min, max, mean, and quantiles
- Check Schema/Metadata: Validate results across the source and target, or between the source and an expected value:
  - Check column names, data types, column ordering or positions, and data lengths
- Check Data Transformations: Validate actual values at intermediate steps against expected values:
  - Check custom data transformation rules
  - Check data quality, such as whether values are within a range, present in a reference lookup, or within a domain of allowed values, or whether the row count matches a particular value
  - Check data integrity constraints such as not null, uniqueness, and no negative values
- Data Security Validation: Validate different aspects of security, such as:
  - Verify that data complies with applicable regulations and policies
  - Identify security vulnerabilities in the underlying infrastructure, tools, or code that can affect data
  - Identify issues at the access, authorization, and authentication levels
  - Conduct threat modeling and test data at rest and in transit
- Data Pipeline Validation: Verify pipeline-related aspects, such as whether:
  - The expected source data is picked up
  - The operations in the pipeline (e.g., aggregation, transformations, cleansing) meet requirements
  - The data is delivered to the target
- Code & Pipelines Deployment Validation: Validate that pipelines and their code have been deployed correctly in the required environment
- Scale seamlessly for large data volumes
- Support orchestration and scheduling of validation jobs
- Provide a low-code approach to define data sources and configure validation rules
- Generate a report that details the validation results across datasets for the configured rules
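Several of the schema, transformation, and integrity checks listed above reduce to counting the rows that violate a rule. The sketch below is a hypothetical, in-memory illustration: every function name is invented for this example, a schema is assumed to be a list of `(column name, data type)` pairs, and a production version would express the same predicates as Spark column expressions. Value-based checks skip nulls on purpose, because the `not_null` rule reports them separately.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence, Tuple

Row = Dict[str, Any]


@dataclass
class CheckResult:
    rule: str
    column: str
    failures: int

    @property
    def passed(self) -> bool:
        return self.failures == 0


def check_schema(actual: Sequence[Tuple[str, str]],
                 expected: Sequence[Tuple[str, str]]) -> CheckResult:
    """Compare (name, type) pairs position by position; also counts missing/extra columns."""
    mismatched = sum(1 for a, e in zip(actual, expected) if a != e)
    missing_or_extra = abs(len(actual) - len(expected))
    return CheckResult("schema", "*", mismatched + missing_or_extra)


def count_failures(rows: List[Row], column: str,
                   predicate: Callable[[Any], bool]) -> int:
    """Count non-null values that violate the predicate."""
    return sum(1 for r in rows
               if r.get(column) is not None and not predicate(r[column]))


def check_not_null(rows: List[Row], column: str) -> CheckResult:
    return CheckResult("not_null", column, sum(1 for r in rows if r.get(column) is None))


def check_unique(rows: List[Row], column: str) -> CheckResult:
    values = [r[column] for r in rows if r.get(column) is not None]
    return CheckResult("unique", column, len(values) - len(set(values)))


def check_non_negative(rows: List[Row], column: str) -> CheckResult:
    return CheckResult("non_negative", column,
                       count_failures(rows, column, lambda v: v >= 0))


def check_in_range(rows: List[Row], column: str, low: float, high: float) -> CheckResult:
    return CheckResult("in_range", column,
                       count_failures(rows, column, lambda v: low <= v <= high))


def check_in_reference(rows: List[Row], column: str, reference: set) -> CheckResult:
    return CheckResult("in_reference", column,
                       count_failures(rows, column, lambda v: v in reference))


def check_row_count(rows: List[Row], expected: int) -> CheckResult:
    return CheckResult("row_count", "*", 0 if len(rows) == expected else 1)


def summarize(results: List[CheckResult]) -> Dict[str, int]:
    """Roll individual results up into the pass/fail totals a report would show."""
    passed = sum(1 for r in results if r.passed)
    return {"passed": passed, "failed": len(results) - passed}
```

Returning a failure count rather than a boolean lets the report say how many rows broke each rule, not just whether it failed.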

High-Level Overview of the Solution

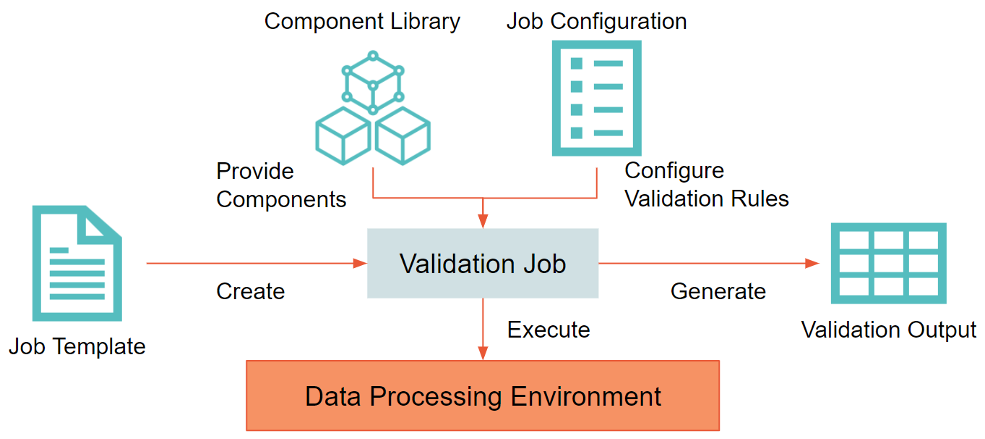

Below is a high-level design for a data quality and validation solution that addresses the above-mentioned requirements.

- Component Library: Package commonly used validation rules as stand-alone components, provided out of the box through a pre-defined Component Library.

- Custom Components: Advanced users, or certain scenarios, may require custom validation rules. These can be supported through an extensible framework that allows new components to be added to the existing library.

- Job Configuration: A typical QA tester prefers to configure validation jobs in a low-code way, without having to write code. A JSON- or YAML-based configuration can be used to define the data sources and configure the different validation rules.

- Data Processing Engine: The solution must scale to handle large volumes of data. A big data processing framework such as Apache Spark can serve as the base framework, which enables validation jobs to be deployed and executed in any data processing environment that supports Spark.

- Job Templates: Pre-defined job templates and customizable job templates can provide a standardized way of defining validation jobs.

- Validation Output: The job should produce a consistent validation report that summarizes the results of each validation rule across the configured data sources.
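The component library, custom components, JSON job configuration, and validation report described above could fit together roughly as follows. This is a minimal sketch under several assumptions: the `component` decorator, the `COMPONENTS` registry, the configuration keys, and the report shape are all hypothetical, and in-memory lists of rows stand in for the Spark DataFrames a real engine would read from the configured sources.

```python
import json
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
# Hypothetical component library: maps a rule name to a function that
# returns the number of rows violating that rule.
COMPONENTS: Dict[str, Callable[..., int]] = {}


def component(name: str) -> Callable:
    """Decorator that registers a validation rule under a configurable name."""
    def register(fn: Callable[..., int]) -> Callable[..., int]:
        COMPONENTS[name] = fn
        return fn
    return register


# Out-of-the-box components shipped with the library.
@component("not_null")
def not_null(rows: List[Row], column: str) -> int:
    return sum(1 for r in rows if r.get(column) is None)


@component("in_range")
def in_range(rows: List[Row], column: str, low: float, high: float) -> int:
    return sum(1 for r in rows
               if r.get(column) is not None and not low <= r[column] <= high)


# A custom component added by an advanced user; the job runner is unchanged.
@component("positive")
def positive(rows: List[Row], column: str) -> int:
    return sum(1 for r in rows if r.get(column) is not None and r[column] <= 0)


# Low-code job definition a QA tester could write (YAML would work equally well).
JOB_CONFIG = """
{
  "job": "orders_daily_validation",
  "source": "orders",
  "rules": [
    {"component": "not_null", "column": "order_id"},
    {"component": "in_range", "column": "amount", "params": {"low": 0, "high": 10000}},
    {"component": "positive", "column": "quantity"}
  ]
}
"""


def run_job(config_text: str, datasets: Dict[str, List[Row]]) -> Dict[str, Any]:
    """Run every configured rule against the source and build a validation report."""
    config = json.loads(config_text)
    rows = datasets[config["source"]]
    results = []
    for rule in config["rules"]:
        check = COMPONENTS[rule["component"]]
        failures = check(rows, rule["column"], **rule.get("params", {}))
        results.append({
            "rule": rule["component"],
            "column": rule["column"],
            "failures": failures,
            "status": "PASS" if failures == 0 else "FAIL",
        })
    return {"job": config["job"], "source": config["source"], "results": results}
```

Because the configuration refers to components only by name, adding a rule to the registry never requires changing `run_job`. Pre-defined job templates could then be partially filled-in configurations of this form.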

Accelerate Your Own Data Quality Journey

At GlobalLogic, we are working on a similar approach as part of our GlobalLogic Data Platform. The platform includes a Data Quality and Validation Accelerator, a modular and scalable framework that can be deployed on serverless Spark environments in the cloud to validate data from a variety of sources.

We regularly work with our clients to help them with their data journeys. Tell us about your needs through the contact form below, and we would be happy to talk with you about next steps.
