Welcome to the next frontier of the digital era, where virtual reality transcends boundaries and the metaverse emerges as an immersive and interconnected virtual world. Those of us involved in digital product engineering find ourselves at the precipice of a transformative moment. The metaverse has the potential to revolutionize the way we conduct financial transactions, interact with customers, and establish trust in an increasingly virtual world.

However, venturing into the metaverse comes with its own unique set of challenges, particularly for the banking, financial services and insurance sector. We learned a great deal about how those challenges are impacting executives at some of the world’s leading financial institutions in a recent digital boardroom event hosted by the Global CIO Institute and GlobalLogic.

‘The Wild West: Regulation In The Metaverse’ was moderated by Dr. Jim Walsh, our CTO here at GlobalLogic. It was the first of three thought-provoking digital boardrooms we’re hosting to explore the issues driving – and impeding – finance product innovation in the metaverse. Dr. Walsh was joined by nine executives from some of the world’s largest financial institutions, spanning enterprise architecture, information security, technology risk, IT integration, interactive media and more.

In this article, we delve into the main obstacles these companies are facing as they prepare to do business in this new realm: regulation, identity verification and management, creating an ecosystem of trust, and governance structures that will support law and order in the metaverse.

1. Regulating the Next Wild, Wild West for Finance

Experts have raised concerns over the lack of regulatory oversight within the metaverse, warning that users are at risk of falling victim to real-world harms such as fraud, especially given the metaverse’s overreliance on decentralized cryptocurrencies. The EU Commission is working on a new set of standards for virtual worlds, for which it received public feedback in May 2023. The World Economic Forum is calling for the rest of the world to follow suit and regulate digital identities within the metaverse.

This is the backdrop against which we kicked off our roundtable discussion on regulation in the metaverse.

And of course, we cannot talk about regulation in the metaverse without first discussing whether it’s even needed at all, and to what extent.

Recommended reading: Fintech in the Metaverse: Exploring the Possibilities

The metaverse is not new, as one participant pointed out; what’s happening now is that technologies are colliding to create new business opportunities. We’re seeing more and more examples of the Internet being regulated, and now must turn our attention to what impact those regulations may have on the emerging metaverse. Will it slow adoption or change how people interact?

“People have been waking up to why it’s been important to have some limitations around the complete freeness of the internet of the ‘90s,” a panelist noted. “Regulations must evolve in a way that the value of the metaverse is not compromised.”

Another noted that anywhere commerce and the movement of currency can impact people’s lives in potentially negative ways, the space must be regulated. In order to maintain law and order in the metaverse, we’ll need a way of connecting metaverse identities to real people. And so another major theme emerged.

2. Identity Verification and Management in the Metaverse

Panelists across the board agreed that identity verification and management is a prerequisite for mainstream consumer adoption of the metaverse as a place to do financial business. Banking, insurance, and investment companies will therefore be looking for these solutions to emerge before entering the metaverse as a market for their products and services.

Look at cryptocurrency as an example, one participant recommended. “Crypto was anonymous, decentralized and self-regulated – but those days are over. Look at the token scams that have happened in crypto. That’s not a community capable of self-regulation.”

If the metaverse is going to scale, they said, we need regulation – and anonymity cannot persist.

Another attendee suggested we look to Roblox and Second Life as early examples of closed worlds with identity verification solutions. Second Life has long required that users from specific countries or states verify their real identity in order to use some areas of the platform, and had to go state-by-state to get the regulatory approvals to allow users to withdraw currency. For its part, Roblox introduced age and identity verification in 2021. These were closed worlds where you could be whatever you want, but identity was non-transferable.

The metaverse, on the other hand, is a place where you can move through worlds, transfer assets and money from virtual to real worlds, etc. Anti-money laundering and identity management will need to catch up before it’s a space where consumers and the companies that serve them can safely do business.

3. Trust & Safety in the Metaverse

Closely related to identity is the issue of trust in the metaverse, and it’s an impactful one for finance brands and the customers they serve. There must be value and reasons for people to show up and interact, and the metaverse cannot be a hostile, openly manipulated environment if we’re going to see financial transactions happening at scale.

Already, one participant noted, societal rules are being brought into the metaverse. You don’t need physical contact to have altercations and conflict; tweets and Facebook comments can cause harm in real ways, and we need to consider the impacts of damaging behaviors in the highly immersive metaverse. Platforms create codes of conduct, but those expectations don’t persist across the breadth of a user’s experience in the metaverse.

Another pointed out that we don’t even have customer identity or online safety solutions that work perfectly in Web 2.0, and we are carrying these known flaws into Web 3. Credit card hacking and data breaches involving online credit card purchases have plagued e-commerce since its inception.

Even so, the level of concern over privacy and safety issues varies wildly among consumers. Some will be more comfortable with a level of risk than others.

4. Metaverse Governance and Mapping Virtual Behavior to Real-World Consequence

Dr. Walsh asked the group: will we have government in the metaverse, or will it be self-governing?

On this, one participant suggested that regulating blockchain will sort out much of what needs to happen for the metaverse. The principles of blockchain are self-preservation of the community and consensus, they said, but that’s going to take a while to produce in the metaverse.

Recommended reading: Guide to Blockchain Technology Business Benefits & Use Cases

Another kicked off a fascinating discussion around the extent to which AI might “police” the metaverse. Artificial intelligence is already at work on Web 2.0 platforms in centralized content moderation and enforcing rules against harassment. Imagine metaverse police bots out in full force, patrolling for noncompliance. We’ll need this for the self-preservation of the metaverse, the attendee said.

Participants seemed to agree that when what’s happening in the metaverse has real-life consequences, regulation must reflect that. Legitimate business cannot happen in a space where financial crimes are committed with impunity.

However, who will be responsible for creating and enforcing those regulations remains to be seen. In a space with no geographical boundaries, which real-world governments or organizations will define what bad behavior is?

“If I’m in the European metaverse, maybe I have a smoking room and people drink at 15,” one participant noted with a wry smile. “That’s okay in some parts of the world, but it’s very bad behavior in others.”

In the metaverse as a siloed group of worlds with individual governance and regulation, financial institutions may have to account for varying currency rates and conversion, digital asset ownership and portability, and other issues. Or, we may see the consolidation of spaces and more streamlined regulations than in the real world and Web 2.0. The jury is out.

Reflecting Back & Looking Ahead

For finance brands, the sheer volume of work to be done before entering the metaverse in a transactional way seems overwhelming. “The amount of things we have to build on the very basic stack we have is staggering,” one participant said.

However, we will bring a number of things from the real, physical world into the metaverse because we need those as humans. These range from our creature comforts – a comfortable sofa, a beautiful view – to ideals such as trust, and law and order, the nuts and bolts of a functioning society. How those real-world ideas and guiding principles adapt to the metaverse remains to be seen.

We’re currently in the first phase of the metaverse, where individual worlds define good and bad behavior, and regulate the use of their platforms. The second stage will be interoperability by choice. For example, Facebook and Microsoft could agree you can have an identity move between their platforms, and in that case those entities will dictate what behaviors are acceptable or not in their shared space.

Eventually, people should be able to seamlessly live their life in the digital metaverse. That’s the far future state, where you can go to a mall in the metaverse, wander and explore, and make choices about which stores you want to visit. By the time we get there, we’ll need fully implemented ethics, regulations, and laws to foster an ecosystem of trust – one in which customers feel comfortable executing financial transactions en masse. Large organizations will need to see these regulations and governance in place before they can move beyond experimentation to new lines of business.

The technology is new, but the concepts are not. Past experience tells us there are things we need to get into place before we’ll see mass adoption and financial transactions happening at scale in the metaverse.

Regardless of how one feels about having centralized controls imposed, the vast majority of consumers will not do financial business in an ecosystem without trust. Regulation is one of the key signals financial institutions, banks, insurance providers and others in their space need to monitor, to determine when the metaverse can move from the future planning horizon to an exciting opportunity for near-term business growth.

In the meantime, business leaders can work on establishing the internal structure and support for working cross-functionally with legal and governance functions to stay abreast of regulatory changes and ensure compliance. This is also a good time to explore opportunities where the metaverse could help organizations overcome compliance obstacles, and imagine future possibilities for working with regulators to combat financial crime within the metaverse.

There’s much groundwork to be laid, and it will take a collaborative effort to build the ecosystem of trust financial organizations and customers need to conduct transactions safely and responsibly in the metaverse.

Want to learn more?

- Explore our banking, financial services, and insurance solutions

- Read how a leading retail bank fought payments fraud by leveraging big data and AIOps

- See how a UK bank improved CX for its 14 million customers with AIOps

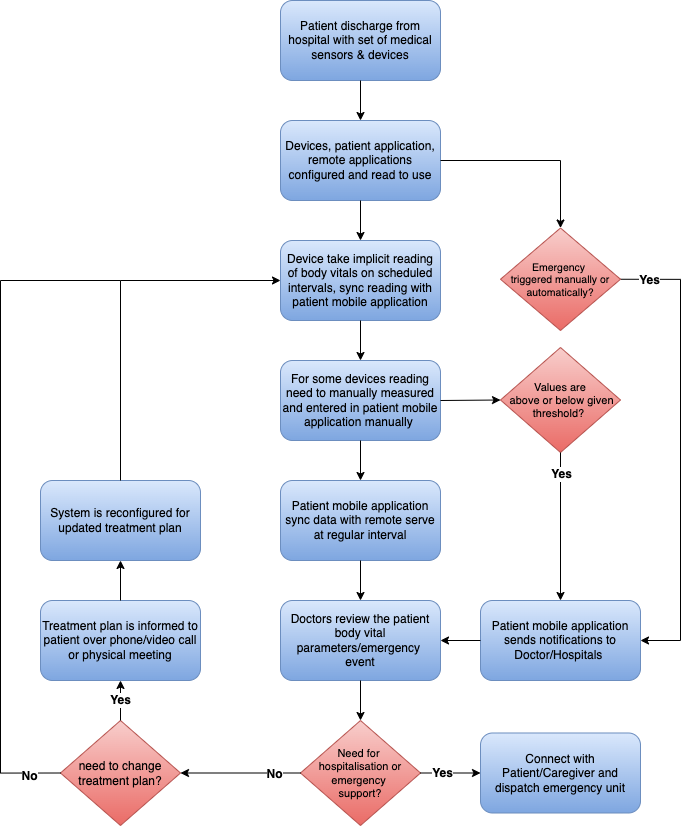

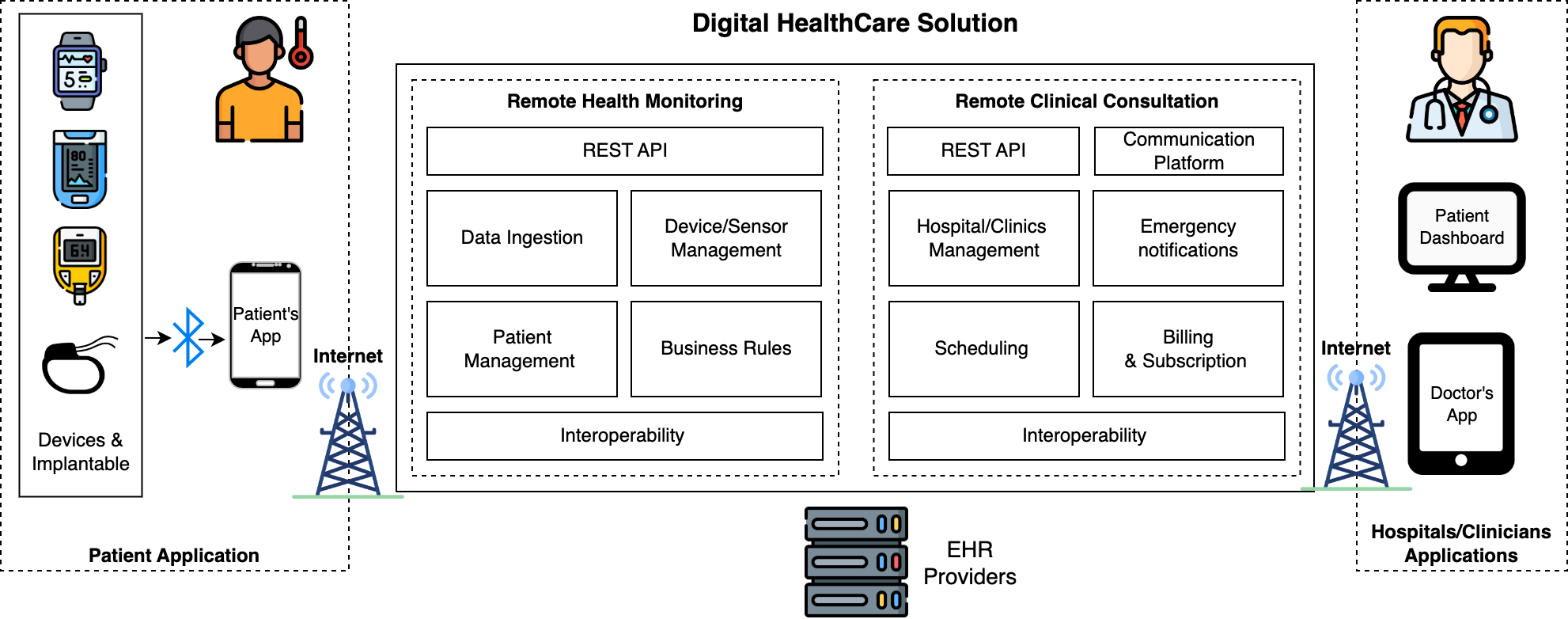

There are many use cases already in practice today, and innovations in the space are opening up new opportunities for remote health monitoring each day. Here are several more ways this technology can be used to benefit patients and improve healthcare outcomes.

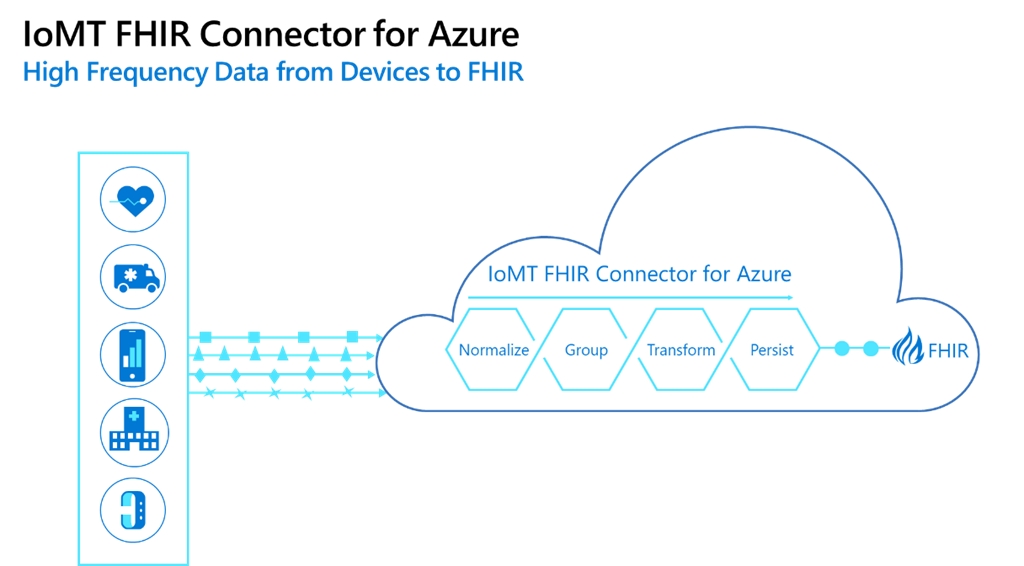

There are many use cases already in practice today, and innovations in the space are opening up new opportunities for remote health monitoring each day. Here are several more ways this technology can be used to benefit patients and improve healthcare outcomes. Example: The IoMT FHIR Connector for Azure

Example: The IoMT FHIR Connector for Azure