This blog is taken from an interview conducted by Omniscope.

Could you please tell us about your background and current focus in the cloud space?

I have been with GlobalLogic Inc. for the last 9 years and have been fortunate to work on some of the most complex and interesting solutions across many domains: telecom, retail, commerce, social, mobile, stocks/trading, geospatial analytics, image processing and project management. Before that, I worked for Microsoft and HCL Technologies.

My transition into a cloud fanatic followed a fairly logical path over time. As a core application developer and solutions architect, I found that choosing the right hosting strategy was one of the important aspects of designing deployment architectures. Around 8-9 years back (ancient history from a technology perspective), shared or dedicated hosting with third-party providers was the prime choice for doing away with high-maintenance in-house deployments. But it still took meticulous effort to stay on top of the various quality attributes: availability, scalability, reliability, maintainability, security and so on.

This was still very much a data center approach, and the cloud was in its infancy. Around the same time, the emergence of the cloud was becoming evident from the heavy early focus it was getting from Amazon and Microsoft. Being closer to the Microsoft stack, I started with some interesting POCs on SQL Azure in GlobalLogic’s Innovation and Technology Group. Once we realized the value, we formed a study/POC group where we started turning some interesting ideas into cloud-based solution designs. The work still centered on the Microsoft implementation of the cloud, but the group dissected cloud solutioning in depth from the perspective of best practices and application development patterns.

We also got into some large-scale cloud-based products and expanded our scope to AWS. The focus was application development on top of PaaS-based clouds. It was equally interesting to get into OpenStack while working for one of the biggest OpenStack contributors, where I got a chance to work on extending the PaaS layer. That took me one step deeper into the cloud stack (SaaS, PaaS, IaaS, virtualization, OS): along with the platform services, the work involved infrastructure and virtualization as well.

The journey into non-Microsoft, non-proprietary, Linux/Python-based open-source development was indeed an eye-opener. Over the same period, it was equally rewarding to participate in the organization’s cloud group and in cloud advisory activities across the organization.

From the organization’s perspective, I am part of some interesting cloud-centric use cases across the stack, along with consultancy, cloud-based product development and advisory activities. Cloud computing and the taxonomy around it are no longer a special competency; they are the default approach. Cloud is an overarching standard with which all solutions have to comply, and the people and solutions that don’t should be ready to embrace extinction.

Which companies do you think are the leaders in the cloud space currently? What makes them tick with the customers?

The landscape is huge and can be categorized by scope (public, private, hybrid) and abstraction (IaaS to PaaS).

In the public space, the big 3 (Amazon, Microsoft and Google) take the top spots. Amazon AWS, being a pure-play IaaS, is far ahead in the game thanks to the lead time it gained by starting early and the exceptional catalogue of services it provides; it is surely the cloud to beat. Microsoft Azure is the biggest PaaS player, with a hand in IaaS as well; the well-integrated Microsoft development ecosystem and the huge Microsoft community give it great benefits in terms of acceptance; it is the developers’ delight. Google Compute Engine/App Engine is going to be highly focused on the IaaS side early on while polishing its PaaS side in parallel; Google is the provider to watch because it is hands-on and an expert at scale.

The degree to which AWS and Azure have penetrated the market and adapted to customer behavior gives them a huge edge. Google, starting afresh with best practices already laid out by the well-accepted AWS and Azure, is surely going to be a prime contender. All three have fairly flexible cost structures, with bulk usage plans, startup plans and free limited-access developer plans. Their availability and uptime SLAs are also comparable. Generally, the PaaS model introduces cloud lock-in (while offering a well-integrated developer experience for rapid application and solution development), whereas IaaS allows cloud portability to an extent (at the cost of some extra overhead in building a platform). The selection pretty much depends on the end customer’s priorities.

Private cloud usage increased significantly from 2013 to 2014, though not at the cost of public cloud usage; there is a clear segment that demands security and performance over the theoretically infinite pool of resources on the public cloud. The key contributors in the private space are VMware and OpenStack (open source), with the latter giving a tough time to the long-time leader VMware. Being open source, OpenStack does bring some integration and support overhead, but the OpenStack community is mature and responsive.

To combine in-house security and performance with the near-infinite capacity of the public cloud, hybrid clouds have been gaining huge traction. An in-house private cloud that cooperates and integrates well with the public cloud is a highly desirable use case. AWS and Microsoft have already made headway into the hybrid cloud space with the introduction of Direct Connect and ExpressRoute respectively. These services enable private connections between the providers’ data centers and the in-house infrastructure (bypassing the public internet), offering reliability, performance and high security.

As a note, a multi-cloud strategy is best suited to more resilient large-scale solutions. Even the big players have seen downtimes that affected customers badly.

What’s your view about Google’s recent massive price drop for its Cloud platform? Is that likely to alter the Cloud landscape (now or in future)?

Amazon AWS did respond by slashing the prices of its compute and storage services, I think the very next day. Google has all the ammunition it needs to be innovative and creative in breaking into the public cloud game. On the other hand, Amazon has a huge customer base that can back it through and through.

It’ll be interesting to see how they get more creative and innovative in managing their data centers and virtualization strategies on the one hand, and how they play with their profit margins to maximize market share on the other. In my opinion it is going to be a never-ending battle, with end customers gaining big time. It is all about who has the best supply chain efficiency and the best approach to managing the cost of maintaining data centers.

What selection criteria do you think customers (IT Decision Makers at companies) typically consider while choosing a particular Cloud company? Do these differ by size/type of the company and/or by the specific workload being considered? Who are the typical people/titles involved in the decision-making?

This may sound like a digression, but it will converge later. For business-critical solutions (which almost every solution is in the current cut-throat market), a multi-cloud and hybrid cloud strategy is an absolute must from a continuity perspective. There have been bad times for all of the providers, and they leave customers badly scarred. Even well within availability SLAs of three, four or even five 9s, customers need to realize that downtime is a reality and that Murphy’s Law applies equally everywhere. In one of my solutions with one of the big cloud providers, there was a downtime of around 2 hours just before the press release, and in my early days I wasn’t mature enough to have thought this through, among many other mistakes (delivery next month they had said). Very important…
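To put the "9s" in perspective, here is a small back-of-the-envelope sketch of how much downtime an availability figure of N nines still permits. It assumes a 365-day measurement window (many real SLAs are measured monthly, with their own exclusions), and the function names are made up for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: a 365-day measurement window


def downtime_budget_minutes(nines: int, period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the downtime (in minutes) still allowed by an availability of N nines.

    Three nines means 99.9% availability, i.e. an unavailability of 10**-3.
    """
    if nines < 1:
        raise ValueError("nines must be a positive integer")
    unavailability = 10.0 ** -nines
    return period_minutes * unavailability


def outage_fits_budget(outage_minutes: float, nines: int) -> bool:
    """True if a single outage of the given length stays within the yearly budget."""
    return outage_minutes <= downtime_budget_minutes(nines)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} nines: {downtime_budget_minutes(n):.2f} minutes per year")
    print("2-hour outage within three nines:", outage_fits_budget(120, 3))
    print("2-hour outage within four nines:", outage_fits_budget(120, 4))
```

Under these assumptions, a 2-hour outage like the one described above fits inside a yearly three-nines budget (about 8.76 hours) but far exceeds a four-nines budget (about 52.6 minutes). Either way, the timing of an outage can hurt more than its length.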

This brings in the need to work out deployments across multiple clouds. Even if not for the availability of the entire solution, architects need to think through at least a neat failover mechanism to a feature-degraded backup deployment on another cloud. I am still not on to your question, but bear with me… This brings the cloud portability perspective into the game, i.e. designing solutions in a cloud-agnostic fashion to avoid maintainability and sustainability issues.
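As a rough illustration of the failover idea, the sketch below picks the first healthy deployment from a priority-ordered list, so traffic can fall back to a backup on another cloud that offers fewer features. Everything here is hypothetical: the `Deployment` type, the health probes and the feature names are illustrative assumptions, not any provider’s API.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Optional, Sequence


@dataclass(frozen=True)
class Deployment:
    """One deployment of the solution on some cloud (all fields are illustrative)."""
    name: str                        # hypothetical label, e.g. "primary-on-cloud-a"
    is_healthy: Callable[[], bool]   # health probe supplied by the caller
    features: FrozenSet[str]         # features this deployment can serve


def choose_deployment(deployments: Sequence[Deployment]) -> Optional[Deployment]:
    """Return the first healthy deployment in priority order, or None if all are down."""
    for deployment in deployments:
        try:
            if deployment.is_healthy():
                return deployment
        except Exception:
            # A probe that raises is treated the same as an unhealthy deployment.
            continue
    return None


if __name__ == "__main__":
    primary = Deployment(
        "primary-on-cloud-a",
        lambda: False,  # simulate an outage on the primary cloud
        frozenset({"checkout", "search", "recommendations"}),
    )
    backup = Deployment(
        "backup-on-cloud-b",
        lambda: True,
        frozenset({"checkout", "search"}),  # feature-degraded: no recommendations
    )
    active = choose_deployment([primary, backup])
    print(active.name if active else "no deployment available")
```

In practice the switch would usually happen at the DNS or load-balancer level rather than in application code; the point of the sketch is only that the backup is chosen by priority and may legitimately serve a smaller feature set.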

This is an important point: once you have your tradeoffs and priorities in place, you can identify the providers best suited to your kind of solution. For instance, HP and Rackspace are OpenStack-based public cloud providers and are working hard to beef up their PaaS offerings. Building a solution on the OpenStack platform components gives you all the positives of a PaaS cloud, and you get cloud portability out of the box. You can also achieve this by using a third-party platform component on top of IaaS clouds (AWS and Microsoft IaaS), but it really depends on your cloud strategy.

Moving on, you definitely need to think through the other criteria by category (compute, storage, network, cross-service, support, SLA, management, and billing/metering requirements) and how the cloud providers you have shortlisted map to your long-term roadmap.

In some cases the customer has a key role to play in selecting a particular cloud provider. Existing customer partnerships and relationships drive the choice in a considerable number of instances, but a thorough investigation and analysis by the participating architects, under the guidance of the CTO, is surely done before embracing a particular provider or group of providers.

What role does Open Source play in Cloud related decisions for companies? Do you foresee the importance of Open Source increasing or decreasing in future? How?

Open source and community-driven initiatives are taking a key role in driving the market. OpenStack, CloudStack and Eucalyptus are the key players. OpenStack in particular is being embraced wholeheartedly: it is backed by companies like HP, Rackspace, IBM, Dell, AT&T, Ubuntu, Red Hat and Nebula as platinum members, and by hundreds of other supporting companies, with more than 17,000 developers contributing from across 140 countries.

The public clouds of companies like HP are implemented using OpenStack, and products like Helion, which offer a well-integrated, one-click deployable private cloud installer, are getting amazing traction. I have worked on the OpenStack Trove project myself, and the experience has been amazing so far. I was impressed by the maturity of the community and of its processes for continuous development and integration, especially given that the work is driven by developers spread across different countries.

The benefits from the end customer’s perspective are that these platforms are community driven and have key players backing them. The other important benefit is the cloud-agnostic, cloud-portable strategy you embrace out of the box when you work on an OpenStack-based cloud (public or private). For instance, imagine deciding on Rackspace as the base cloud: you then don’t really have to make breaking changes to implement a multi-cloud strategy with HP as the other provider. Both are based on OpenStack, so there are no maintainability issues. Secondly, if you decide on a private/hybrid cloud strategy down the line for some of your secure on-premise deployments, you bring a private OpenStack cloud into your on-premise ecosystem and the solution is in place from day one.

The importance will surely increase for some time in the near future, but the biggies are catching up. As mentioned earlier, solutions like ExpressRoute and Direct Connect may turn out to be private cloud killers, and open source solutions right now play heavily on the private cloud side only. Public clouds based on open source do exist, but the niche for open source so far has been the private cloud. It’ll be interesting to see the amalgamation of an open source private cloud connected to a proprietary public cloud over private connections.

What are the key challenges that companies are facing to embrace the Cloud technologies? Will security always be an obstacle for the growth of cloud services? Are these challenges unique for large vs. small companies?

The cloud providers are dealing with a unique set of challenges and are trying to be innovative. Their problems span how to maintain their data centers; which virtualization technologies to use for better performance and isolation; how to provide 100 percent availability across their data centers; how to keep developers and businesses interested in them; how to stay abreast of the latest technology trends and make them available as services on their particular cloud (mainly for PaaS players); and how to provide integration points so that their cloud solution fits into enterprises seamlessly.

Cloud business users are worried about uptime, availability, performance and security for their business-critical, cloud-targeted applications. They demand solutions to these very basic challenges from technology specialists, application architects and developers. For the cloud technology brigade, the biggest challenge is to think in terms of an infinitely decoupled solution and to overlay cloud portability on that thinking. As a very basic example, the majority of the developer community thinks of the public cloud as an infinite resource pool, but they need to realize that it comes at a pay-per-use cost, and that they still need to make full use of the resources they already have rather than depend purely on the scaling features. The challenge is to spread a cloud-centric thought process and to devise strategies and patterns around it.
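A toy calculation makes the pay-per-use point concrete: for the same load, the number of instances you pay for depends heavily on how well each one is utilized. The load, per-instance capacity, utilization targets and hourly price below are made-up numbers for illustration only, not real provider pricing.

```python
import math


def instances_needed(load_rps: float, capacity_rps: float, target_utilization: float) -> int:
    """Smallest instance count that keeps every instance at or below the target utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if load_rps <= 0:
        return 0
    return math.ceil(load_rps / (capacity_rps * target_utilization))


def hourly_cost(instance_count: int, price_per_instance_hour: float) -> float:
    """Pay-per-use bill for one hour at a flat (hypothetical) per-instance price."""
    return instance_count * price_per_instance_hour


if __name__ == "__main__":
    load = 900.0       # requests per second to serve (hypothetical)
    capacity = 200.0   # requests per second one instance can handle (hypothetical)
    price = 0.10       # price per instance-hour (hypothetical)

    for utilization in (0.25, 0.75):
        count = instances_needed(load, capacity, utilization)
        print(f"target utilization {utilization:.0%}: "
              f"{count} instances, {hourly_cost(count, price):.2f} per hour")
```

With these numbers, running instances at 25% utilization takes 18 of them, while tuning the application to run comfortably at 75% takes 6, a third of the bill for the same load. Scaling out is still the right answer when the load really grows; the sketch only shows why it should not be the first reflex.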

Security is just one aspect, though surely the most important one. Private and hybrid cloud strategies have solved it to an extent. As mentioned earlier, with private connections between the on-premise infrastructure pool (whether a private cloud or not) and the public clouds, the security issues are being well taken care of. But if solutions are not architected with a cloud-centric, multi/hybrid cloud strategy and an intense focus on cost utilization and cloud portability, all these advancements are still not going to solve the challenges.

What’s your view on the future of the Cloud (next 5-10 years)? (e.g. – in terms of competition, new business models, technology, mobile, etc.?)

It is bound to go the way other consumer software has gone. Microsoft Office was available on OS X and is now on iOS. Is Apple going to be worried about the usage of Pages, Numbers, Keynote etc. getting hit by that? Absolutely not, as this is what consumers want from an iOS device from a usability and coherence perspective.

How about taking multi-cloud decisions off the end consumers’ hands and providing them as a cloud platform capability? The consumer would go to a particular cloud and be able to select which other cloud providers s/he wants to use for the application deployment. How this turns out in reality is hard to imagine, and millions of baby steps may be needed to give the customer such an amazing experience.

For the near future, in my opinion, it’ll be about hybrid/multi-cloud strategies and the features the big cloud providers offer around them. This brings cloud brokering products into the picture, and their importance increases as hybrid/multi-cloud strategies are recognized as the best options. Competition-wise, Google is definitely going to change the way the cloud operates right now and is bound to bring in some innovative options for end users. Open source is going to keep pace for some time to come and is definitely going to play an important role in the overarching hybrid cloud strategy.

It is definitely time for the IaaS-centric providers to think about cloud-portable PaaS and SaaS aspects, because rapid application development needs are going to drive usage and acceptance in the times to come. Some of the IaaS providers have already started beefing up their platform capabilities and are investing heavily on the PaaS side. Private cloud usage will suffer because of the products for private connectivity between on-premise infrastructure and public cloud data centers, but that is still at an early stage, with the prime questions being how it is going to operate across data centers that are distributed worldwide.

Big data analytics is another key area that is going to play a huge role in the consumer and enterprise technology landscape. Provisioning it and running it well within the cloud platform as a managed service is also a key area in which the cloud providers are building capabilities.

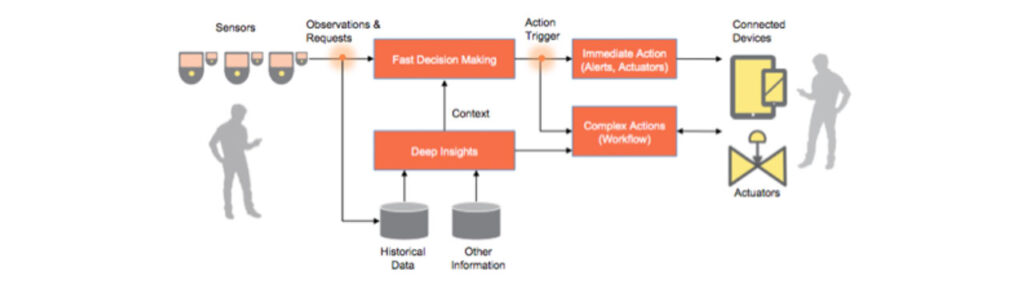

The mix of big data analytics (the ongoing use cases and the emerging ones from the Internet of Things) and cloud is already getting huge traction and will continue to do so over the next 5-10 years.