What is an Architect?

For a software company, or a traditional enterprise going through digital transformation, there is no more important hire than your chief product software architect. People mean different things when they call themselves an "architect". This is not, generally, an attempt to frustrate or confuse you. Instead it's caused by increasing specialization, and also by the gradual convergence of the "IT", "system integration" and "product development" realms in the context of digital businesses. However, it will generally be disastrous if you put the wrong type of "architect" in the wrong role. The skills and mindsets are generally not transferable. Generally speaking:

- A "systems architect" or "network architect" is someone who knows how to set up hardware or cloud systems for maximum performance, reliability and security. This is often called the "physical architecture" or sometimes the "IT architecture" since it concerns itself primarily with what software capabilities are deployed where, and how they connect. This type of activity requires some coding, but primarily in the area of deployment automation, system monitoring and other physical deployment configuration work. Companies that are evolving from an IT focus to a more "software product" focus generally have this type of architect already on staff.

- A "solutions architect" generally comes from the systems integration world. He or she tends to be expert at selecting off-the-shelf packaged software, at configuring these packages, and at implementing interfaces between systems. He or she will generally not be focused on creating new software systems "from scratch", but will rather create them by combining pre-existing purchased systems with some home-built systems. Frequently this person will have coding skills, often in scripting languages, used to tie existing systems together, transform and manage data, and do similar tasks.

In this blog we focus on yet another type of architect, the "software product" architect. This is the person who can listen to your requirements, and drive the creation of your system "from scratch"--that is, to develop your unique IP by writing software code in languages and platforms such as Java, C# and .NET, among many others, rather than buying and configuring commercial off-the-shelf systems.

Software product architects are frequently rather eccentric people. For example, one of the best architects I ever worked with drove a dirty, beat-up 20-year-old car despite making a multiple six-figure (USD) annual salary. He was constantly getting lost, even when he had been to the same location multiple times. Another architect was constantly late for meetings. Even if his previous meeting ended on time and was literally in the same building in the room next door, he'd still somehow manage to be 20 minutes late. Yet a third excellent architect was so paranoid that I honestly would not have been surprised to see him come to work in a tin-foil hat to protect his brain from alien radio signals.

Eccentricity is not a job requirement, and some excellent software product architects actually seem quite normal. But don't let eccentricity dissuade you from hiring someone who is otherwise great. Eccentricity does more-or-less come with the territory.

Architects also tend to be highly independent thinkers. They like to figure things out for themselves, and they aren't bothered a bit if no one agrees with them. Some like to argue, others prefer to be quietly confident, but all good architects tend to be very sure of their work. The best ones can explain their perspective so well that they end up convincing you and everyone else that their approach is the right one--and it is.

How Do You Interview for an Architect?

So how do you hire a great software product architect when, almost by definition, you are looking for someone at least as smart as, or maybe even smarter than, you and your very best people: a person who knows things that you and your team don't already know? Obviously, you'll want to check your candidate's technical credentials as well as you possibly can--ideally by getting people you trust to do "skills interviews" on technical areas to weed any "con artists" out of the system. But technical skills alone do not assure you of a good hire as an architect. Here's an approach that has worked well for me:

- When I'm interviewing an architect candidate, as soon as it is polite I ask him or her to describe a system they designed. For a junior candidate, I ask about one they were involved in, or a commercial system whose architecture they know about and admire. I might ask a senior architect to describe the system they are proudest of, the one they most recently worked on (if they can do that while still respecting confidentiality), or just a system that strikes me as interesting, based on their past CV. If they start to answer verbally, I ask them to go to the whiteboard and sketch it out for me.

- A good architect's natural habitat is the whiteboard. Good architects are never more at home than they are drawing technical diagrams on whiteboards, and explaining, discussing and debating those diagrams with others. Architects tend to be introverts and sometimes even loners--but I've yet to work with a good one who doesn't come alive at the whiteboard in a lively discussion. Notice their body language in addition to their explanations--does it look like they are totally relaxed and in their element at the whiteboard explaining things to someone? Are they animated and excited about the system they are describing? Is their excitement about the system contagious--that is, are you starting to feel excited about it too, at least a little bit? If so, they may be a good architect; if not, they probably aren't. This is a crucial test.

- Listen to the candidate's description of their previous system. Even if you know nothing whatsoever about the business domain or the technology they are describing, if the candidate is good, both will start to become clear to you as they describe it. Good architects make things understandable--and often interesting. They will be able to explain things--even very complex technical things--in a way that makes them comprehensible, while giving you new insights. This is regardless of your own level of understanding, high or low. A good architect should be able to clearly describe a system to an 80+-year-old grandparent, to a business-savvy but non-technical CEO, or to the CTO, in ways they can all understand. A good architect adjusts his or her explanation to the audience--an essential skill.

- The best architects make complex subjects sound simple. On the other hand, mediocre and bad architects survive in their careers by making simple subjects sound complex. When someone is throwing obscure TLAs ("Three-Letter Acronyms") at you, it's easy to be intimidated and back off. Don't. Push until you either understand the system they are describing, or realize they are just throwing acronyms at you to confuse you.

- Don't confuse confidence with arrogance. All good architects (and many bad ones) are self-confident about their work. They may or may not be confident about themselves personally, but they are rock-solid when it comes to their belief in the value of their work. Good architects are not indecisive. I often test this by asking challenging questions, like "Why did you do it this way?", or "I've seen other people do it that way--did you consider that? If you did, why did you reject it?" A good architect will answer these questions easily, without getting upset. He or she will have thought through all the variations you are likely to come up with and come to an answer that they genuinely think is the best. If they appear uncertain when challenged, unless they are very junior, that is not a good sign. A good senior architect may (or may not) be diplomatic, but they will tell you why their way is better--unless you really do come up with an idea they didn't think of. In that case, the best architects will generally consider your idea thoughtfully and very quickly be able to give you an alternative that incorporates it, right there at the whiteboard. You'll know a good potential partner when you see this happen.

- I find arrogance and defensiveness often go hand-in-hand. When you question, or challenge some aspect of their architecture (which I suggest you do--even if it's just to ask for more detail) and your candidate gets emotional or defensive, that's a bad sign. If, subtly or more overtly, he or she tries to make you feel like it's your problem that you don't understand why their approach is better, then that's a very big danger sign. And if they condescend to you or are in any way insulting, I wouldn't hire them.

- You and your team will depend on this person in a vital way if you bring them on-board. Note your own feelings when interacting with them--and don't discount those feelings. You as a human being are a finely tuned instrument for picking up non-verbal cues. Our responses to these cues often manifest themselves as emotions. If you catch yourself feeling dumb, insulted, intimidated, "put down" or otherwise negatively affected during the interview, it could well be that this person has developed an interaction strategy to keep people from questioning him or her by making them feel negative feelings ("I'm dumb") if they do. If a person cannot accept constructive input and questions, or function as a respectful peer or subordinate, this does not lead to successful outcomes no matter how brilliant that person may or may not be. Don't hire them. That being said, examine yourself to see if your reaction is your own issue. If you frequently feel these emotions, I would not necessarily attribute them to this candidate. I would also suggest it doesn't really matter if you "like" this person or not, provided you can work with them. I tend to like the architects I work with, but they are an eccentric group and you may not! Don't let that stop you. In sum, use your feelings as a barometer: they give you valuable feedback about the candidate's communication style, and also let you know if this is someone you feel good about working with.

- I always ask an architecture candidate how much code they still write. The best architects generally still do some degree of coding from time-to-time; and all of the best architects know how to code and can do it extremely well when they need to. My perspective is that unless an architect can code, they are not a good architect--because in that case everything they do is all theory. On the other hand, it is almost always the case that good architects don't currently code a lot. That's because they are generally one of the highest-paid technical employees in the group--probably the highest--so their time is too valuable to have them spend it coding very often. Good architects do spend time coding with developers when they get stuck, developing examples, doing code reviews and giving inputs or updates. Some may end up writing tricky algorithms--or coding for fun in their spare time. The key thing is that they should know how to write code and code hands-on at least some of the time.

What are the Traits of a Good Architect?

To be a good architect, you really do have to be very smart. This means that an architect often is, in fact, the "biggest brain in the room". However, if he or she manifests that by making everyone around them feel stupid, the relationship is not going to work. The best architects are humble in a genuine way, and respect what the people around them bring to the table.

Do such paragons exist? Yes, they really do. But the combination of high intelligence, technical knowledge and soft skills that goes into making a first-rate architect is rare. Here are some things I would trade off or sacrifice to get the positive traits mentioned above.

- Provided he or she had the technical skills and the soft skills, I would not insist that a software product architect be an expert in my business domain. For example, if I worked in retail, I would not insist that my software product architect be an expert in retail when I hired him or her. This may seem surprising. If I were hiring a "solutions" or "system integration" architect, then I would insist they know my domain because their primary job is domain-dependent package selection and integration. Not so for a software product architect.

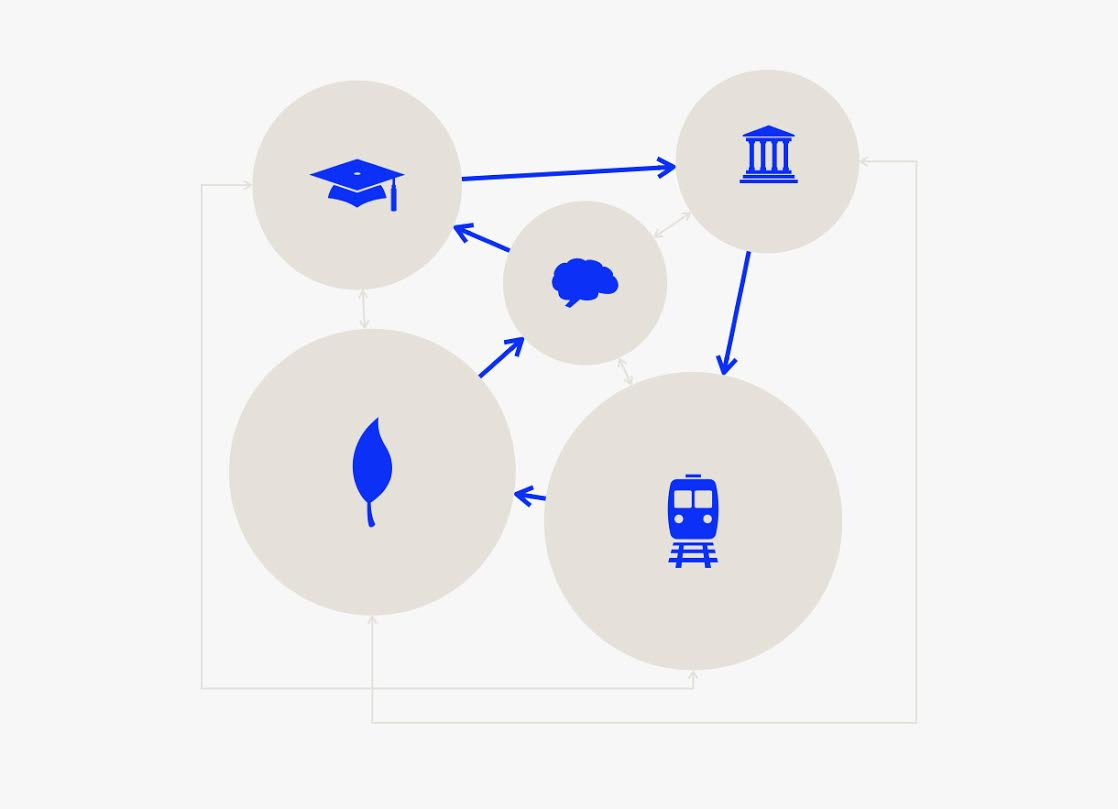

- A software product architect should be an expert in designing and developing software products--that is, SaaS systems, platforms, applications and so on. A software product architect is the kind of architect who creates packaged software in the first place. The ability to create a packaged solution "from scratch" is a very different skill set from the ability to select the right one for your business. A software product architect needs to understand first and foremost how to build first-rate software systems. Perhaps surprisingly, these design skills tend to be transferable from one business domain to another. If you pick the right person, they will have the brains to pick up what they need to know about your domain in a very short period of time.

- I would not insist that an architect candidate be completely current on coding, languages and technologies. Some architects code a lot. Others, however, work primarily in a technical design and technical supervision role. I do think it's essential that architects know how to code, and that they spend at least some of their time--even if it's just a few hours every week or so--actually doing it. However, ironically, your junior engineers may be more up to speed on the latest UI packages or some specific technologies than your architect. If your most junior engineer finds that your architecture candidate has a surprising gap in his or her knowledge of the details of a particular open-source package, that is not in itself a problem. Architects operate at a completely different level of abstraction than coders. An architect's basic job is to take your business requirements, and determine how to embody those in software. Like the architect of a physical home or office building, software product architects worry about things like flow, cohesion, maintainability, structure and other big-picture issues. Even the best software architects may not be up-to-the-minute on the details of a particular package or technology, other than to know when it is useful. The lack of detailed technology knowledge in a particular area is not a negative for an architect.

- Good architects tend to be confident (but not arrogant), and to prefer to figure things out by themselves rather than to be told what to do. This leads to some very interesting dynamics at times, that can make even great architects challenging and frustrating to manage. Still, when you check references and talk to their former bosses, I'd look to see whether those bosses believe that, on the whole, your candidate's merits far outweighed their eccentricities. I would not be put off by the fact that these frustrations and eccentricities exist--provided they are not too extreme. A great architect will create so much value for you and your company that I think it is very much worth the effort to get around their foibles and eccentricities.

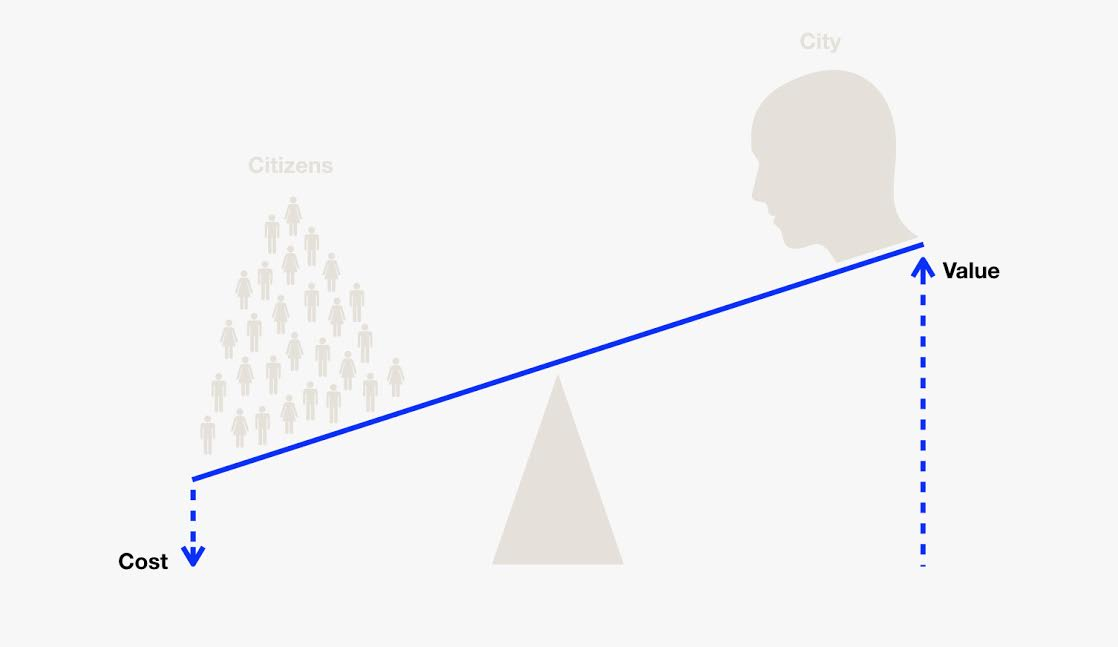

Unless you get incredibly lucky--which happens, but who likes to count on that--the success or failure of your software business or "digitally transforming" company will be heavily impacted by your software product architecture. A good software architecture is what allows your company to rapidly pivot and evolve; to minimize maintenance and operations cost; to scale robustly and smoothly to millions of users; to deliver value to your end users, and to bring revenue to your business. Your software product architect is the key hire to get those benefits. It is very much worth the time and trouble to find the right one--and to work effectively with him or her once they are on-board.
