- Services

Technology Capabilities

- Product Strategy & Experience Design: Define software-driven value chains, create purposeful interactions, and develop new segments and offerings.

- Digital Business Transformation: Advance your digital transformation journey.

- Intelligence Engineering: Leverage data and AI to transform products, operations, and outcomes.

- Software Product Engineering: Create high-value products faster with AI-powered and human-driven engineering.

- Technology Modernization: Tackle technology modernization with approaches that reduce risk and maximize impact.

- Embedded Engineering & IT/OT Transformation: Develop embedded software and hardware. Build IoT and IT/OT solutions.

- Industries

- GlobalLogic VelocityAI

- Insights

Blogs | March 15, 2023 | GlobalLogic

If You Build Products, You Should Be Using Digital Twins

Digital twin technology is one of the fastest-growing concepts of Industry 4.0. In the ...


Blogs | January 25, 2023 | Adam Divall

Deploying a Landing Zone with AWS Control Tower – Part 3

In this post, we’re going to walk through some of the remaining post-configuration tasks...

- About

Press Release | GlobalLogic | September 2, 2024

AI adviser speaks the language of the machine

“AI should remain subservient to human needs,” says AI expert Dr Maria Aret...


Press Release | GlobalLogic | November 6, 2017

ADEPT Hospital Solution Honored as Innovation Finalist

Medical solutions provider Applied Science, Inc. (ASI), digital product development ser...

- Careers

We have 60+ product engineering centers

Engineering Impact

GlobalLogic, a Hitachi Group Company, is a trusted digital engineering partner to the world’s largest and most forward-thinking companies. Today, we help transform businesses and refine industries through intelligent products, platforms, and services.

Since 2000, we’ve been at the forefront of the digital revolution—helping create some of the most innovative and widely used digital products and experiences.

Learn more about what sets us apart

- Product engineering centers
- Active clients
- Professionals in 26 countries
- Product releases per year

What we offer

Explore our services

Unlock the power of data, design, and engineering to fuel innovation and drive meaningful outcomes for your business.

Learn MoreProduct Strategy & Experience Design

Design and build what’s next with help from Method, a GlobalLogic company.

Learn MoreDigital Business Transformation

Advance your digital transformation journey.

Learn MoreIntelligence Engineering

Leverage data and AI to transform products, operations, and outcomes.

Learn MoreSoftware Product Engineering

Create high-value products faster with AI-powered and human-driven engineering.

Learn MoreTechnology Modernization

Tackle technology modernization with approaches that reduce risk and maximize impact.

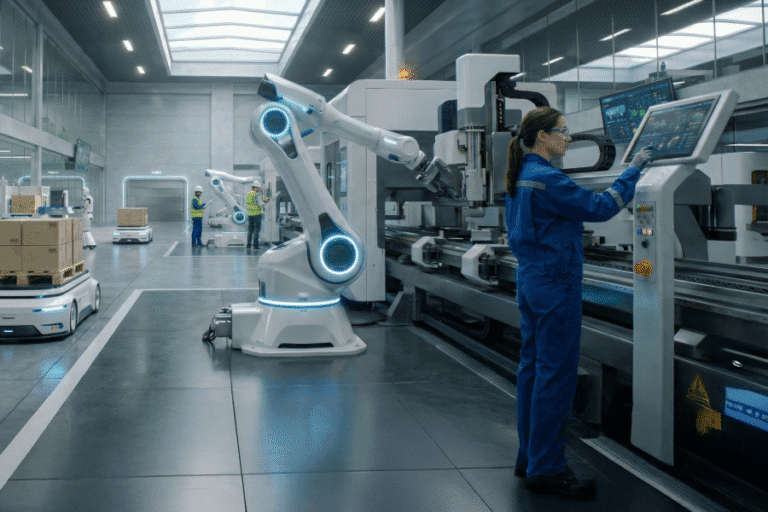

Learn MoreEmbedded Engineering & IT/OT Transformation

Develop embedded software and hardware. Build IoT and IT/OT solutions.

Our Case Studies

Discover how we’re engineering impact with clients around the world.

We work with the world’s largest and most innovative companies—forging deep collaborations to create intelligent products, platforms, and services.

We have a profound impact on everyday life

Every day, billions of people connect with products, platforms, and services that we helped design and engineer.

Make Your Impact

Featured insights

Explore fresh thinking from some of GlobalLogic’s strategists and engineers

Blogs | 12 February 2026

The 6G Revolution: Architecting the Internet of Everything

Embedded Software, Hardware and Silicon Solutions | End-to-End IoT Solutions | Physical AI | Communications and Network Providers

Blogs | GlobalLogic | 13 January 2026

AI-Native Networks: Engineering the Future of Intelligent Telecom

As the telecom industry redefines its role, two prominent industry leaders …

Agentic AI | Cloud Platforms – Hyperscalers | Enterprise AI | Communications and Network Providers

Blogs | GlobalLogic | 18 December 2025

Physical AI: Bringing Intelligence to the Edge of Action

At GlobalLogic, we’re building systems that don’t just observe the physical …

Agentic AI | Physical AI | VelocityAI | Cross-Industry

Get in touch

Let’s start engineering impact together.

GlobalLogic provides unique experience and expertise at the intersection of data, design, and engineering.
