
A Guide to Offline Machine Learning in Healthcare Devices

Published on February 23, 2023

Abhishek Gedam, Principal Architect, Technology


Artificial intelligence (AI) and machine learning (ML) have revolutionized how people interact with technology. They have driven innovation and transformed all kinds of activities: from getting stock recommendations to buying your next pair of socks, technology assists us in many ways each day. For example, whenever users unlock their phones, there is at least one ML-driven service providing recommendations.

AI and ML are dramatically changing the healthcare industry as well. Eventually, companies will use ML to solve both day-to-day and complex healthcare problems.

In the coming years, ML will be able to enhance the healthcare system by helping patients with long-term health conditions as well as improving the lives of everyone around us. Before this is possible, there are various hurdles to overcome. The reliability of ML and AI results and the privacy of patient data are key concerns.

Offline ML (or on-device ML) processes data locally on the device and can help resolve these issues. In this article, you’ll learn about offline ML, various use cases for offline ML in healthcare devices, conceptual solutions, and architecture considerations to keep in mind for your own innovative products.

What’s the Difference Between Online and Offline ML?

With offline ML, data processing happens locally on the device, using a trained model that has been downloaded to it. With online ML, the device stays connected: data processing happens remotely, and the model receives a continuous flow of data, often updating as it does so.

Healthcare businesses can incorporate offline ML in various ways. Here are a few examples:

- Microcontroller-based Internet of Medical Things (IoMT) devices with processing power.

- Mobile client applications; some applications can act as a gateway for IoMT devices.

- Patient bedside medical devices.

- Diagnostic devices such as X-ray machines.

- Pathology lab equipment.

- An ecosystem of therapy devices.


The requirements of offline ML are not unique to the healthcare industry and apply to other domains and related applications. However, in healthcare, offline ML is essential due to the following considerations:

Privacy & Data Security

Medical information is incredibly sensitive, and users aren’t usually willing to share their data with external services. This includes protected health information (PHI) and the output from IoMT sensors, medication, or therapy details. With offline ML, inference runs on the device, so the user’s data does not need to leave it.

Network Latency

Sometimes therapy needs to change rapidly, for example by adjusting neurostimulator parameters or pausing therapy immediately. Because any delay can harm the patient, the decision should not have to wait on a network round trip. A network error could affect the patient as well, which is why an offline solution can be highly beneficial.

Connectivity

There are various locations, in both rural and urban areas, where internet connectivity can be intermittent. At such locations, the ability to support patients and work offline is a beneficial aspect of offline ML.

Cost

Cloud service providers with managed ML services may charge based on the number of requests made to those services. Running inference on the device with offline ML can help healthcare brands reduce these costs.


Offline ML Use Cases

Many offline ML healthcare use cases involve IoMT devices, mobile devices, and other healthcare equipment. Here are a few examples of how this technology can be used.

Health Monitoring & Predictive Analysis

Some applications use IoMT devices or collect user data such as caloric intake, calories burned, sleep, exercise, and idle time to help predict beneficial lifestyle changes. This information involves PHI, so processing it with offline ML can help safeguard it.

Therapy Correction

Patients with specific diseases or health issues generally use special therapy devices. Some of these devices are implanted into the patient and could, in the future, become self-sufficient by adjusting therapy parameters automatically. Healthcare brands can use offline ML in these devices to improve their functionality.

Insurance Premiums

Generally, users aren’t comfortable submitting sensitive information such as personal habits or medical history to a server. Insurance companies can use offline ML solutions to help predict insurance premiums without retaining user data on servers.


Image Analytics

Diagnostic equipment such as MRI, X-ray, and CT scanners can use offline ML to help assess image quality and accuracy, providing a first opinion by analyzing images locally.

Text Reply Prediction

Healthcare brands now use communication platforms for intra-hospital communication. Offline ML can help predict text for doctors or nurses by analyzing recent communication history. Since this may contain PHI, brands can protect information with offline ML when processing information and predicting potential replies.

An Offline ML Solution Overview

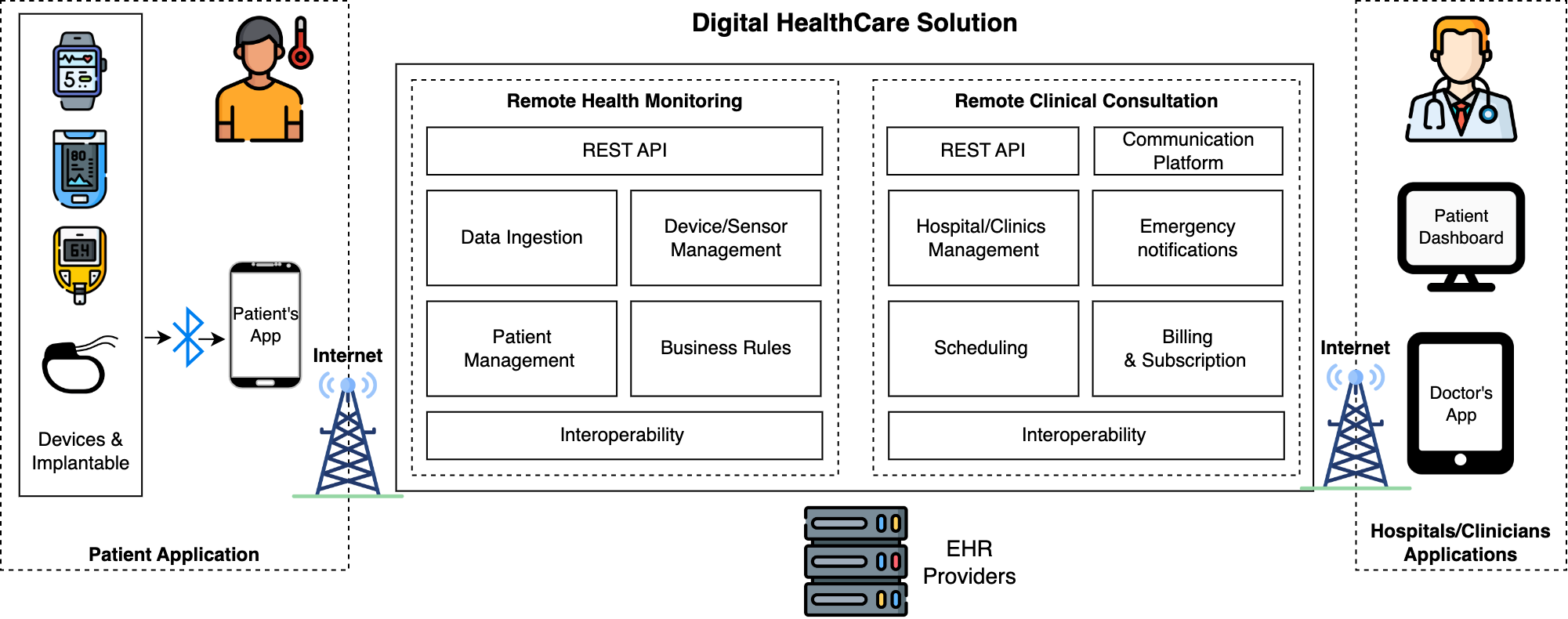

Now, let’s explore a conceptual solution for offline ML in healthcare devices.

As illustrated above, the “Offline ML Module” will be part of a comprehensive solution responsible for processing incoming data and providing results using the ML model. This module will compile data from sources, process data using the ML model, and give results to the business logic, UI, or user action for further processing.
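The flow described above (compile data from sources, run the model, hand results on) can be sketched in a few lines of Python. This is only an illustration: the class name `OfflineMLModule`, the `Result` type, the threshold, and the toy weighted-sum "model" are all hypothetical and do not come from any particular product or SDK.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Sequence

Features = Sequence[float]


@dataclass
class Result:
    score: float
    alert: bool


class OfflineMLModule:
    """Hypothetical module: reads local sources, runs a local model, emits results."""

    def __init__(self, model: Callable[[Features], float], threshold: float,
                 on_result: Callable[[Result], None]) -> None:
        self._model = model
        self._threshold = threshold
        self._on_result = on_result

    def process(self, sources: Iterable[Callable[[], Features]]) -> List[Result]:
        results = []
        for read in sources:
            features = read()                # 1. compile data from a local source
            score = self._model(features)    # 2. run inference on the device
            result = Result(score=score, alert=score >= self._threshold)
            self._on_result(result)          # 3. hand off to business logic or UI
            results.append(result)
        return results


def toy_model(features: Features) -> float:
    """Stand-in for a trained model: a fixed weighted sum (weights are made up)."""
    weights = (0.5, 0.25, 0.25)
    return sum(w * x for w, x in zip(weights, features))


received: List[Result] = []
module = OfflineMLModule(toy_model, threshold=0.7, on_result=received.append)
results = module.process([lambda: (1.0, 1.0, 0.0), lambda: (0.2, 0.4, 0.0)])
```

Keeping the model, the data sources, and the result callback as injected parameters means the same module shape could sit behind a mobile client or a bedside device, with only the model and sources swapped.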

Standalone devices with enough processing power, such as bedside medical equipment, MRI and CT scanners, or mobile applications, can host the Offline ML Module directly.

Low-powered IoMT devices may not be able to process data themselves. In that case, a mobile device can run the Offline ML Module as part of its client application on their behalf. To get an updated ML model, devices need to connect to a remote server that provides it. Because medical devices may have limited processing power, companies should reevaluate their model-updating rules often.

Architectural Considerations for Offline ML in Healthcare

Developers must evaluate several specific architectural considerations when designing offline ML solutions for healthcare devices. Keep these in mind in addition to any application- or device-specific considerations.

Trimmed-down Model

Offline ML targets devices with low processing power. This is why building a small, target-specific, trimmed-down trained ML model is crucial.
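The article does not say how a model should be trimmed. One widely used option is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats. The pure-Python sketch below illustrates the idea on a handful of made-up weights; the function names are hypothetical, and a real project would normally rely on its ML framework's own conversion tooling rather than code like this.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[array, float]:
    """Map float weights to signed 8-bit integers that share one scale factor."""
    max_abs = max(abs(w) for w in weights) or 1.0  # fall back to 1.0 if all zero
    scale = max_abs / 127.0
    quantized = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return quantized, scale


def dequantize(quantized: array, scale: float) -> List[float]:
    """Recover approximate float weights from the 8-bit representation."""
    return [v * scale for v in quantized]


weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

float32_bytes = array("f", weights).itemsize * len(weights)
int8_bytes = q.itemsize * len(q)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The integer form takes a quarter of the storage of 32-bit floats, at the price of a rounding error of at most half the scale per weight. Whether that accuracy loss is acceptable has to be validated for each clinical use.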

Remote Updating

Offline ML models may become outdated due to changes in data structure and may need updates. To solve this, developers should have a workflow that updates models remotely.
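A remote update workflow could look roughly like the sketch below: the device compares its installed version with a manifest published by the server and only swaps in a downloaded model whose checksum matches. The `ModelManifest` structure, the integer version scheme, and the function names are assumptions made for illustration; the download step itself is left out.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModelManifest:
    """Hypothetical metadata a server could publish alongside each model file."""
    version: int
    sha256: str


def should_update(installed_version: int, manifest: ModelManifest) -> bool:
    return manifest.version > installed_version


def verify_model(model_bytes: bytes, manifest: ModelManifest) -> bool:
    return hashlib.sha256(model_bytes).hexdigest() == manifest.sha256


def apply_update(installed_version: int, installed_model: bytes,
                 manifest: ModelManifest,
                 downloaded: Optional[bytes]) -> Tuple[int, bytes]:
    """Return the (version, model) to use; keep the current model on any doubt."""
    if downloaded is None or not should_update(installed_version, manifest):
        return installed_version, installed_model
    if not verify_model(downloaded, manifest):
        return installed_version, installed_model  # corrupted or tampered download
    return manifest.version, downloaded


old_model = b"model-v1"
new_model = b"model-v2"
manifest = ModelManifest(version=2, sha256=hashlib.sha256(new_model).hexdigest())

updated = apply_update(1, old_model, manifest, new_model)
rejected = apply_update(1, old_model, manifest, b"model-v2-truncated")
```

Falling back to the installed model whenever the download is missing or fails verification keeps the device usable when an update goes wrong, which matters when connectivity is intermittent.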


Switching Between Offline and Online

Companies can use offline ML as a backup for scenarios where internet connectivity is lost and the online model cannot be reached. For such cases, developers should design the application to switch from online to offline ML.
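A minimal sketch of that switch, assuming the online call raises `ConnectionError` or `TimeoutError` when the network is unavailable. The predictor functions here are hypothetical placeholders, not a real client library.

```python
from typing import Callable, Sequence, Tuple

Features = Sequence[float]
Predictor = Callable[[Features], float]


def predict_with_fallback(features: Features, online: Predictor,
                          offline: Predictor) -> Tuple[float, str]:
    """Try the online model first; use the on-device model if the network fails."""
    try:
        return online(features), "online"
    except (ConnectionError, TimeoutError):
        return offline(features), "offline"


def offline_model(features: Features) -> float:
    """Placeholder for the on-device model."""
    return sum(features) / len(features)


def online_model(features: Features) -> float:
    """Placeholder for a call to a remote service."""
    return max(features)


def unreachable_model(features: Features) -> float:
    """Placeholder that simulates a lost connection."""
    raise ConnectionError("no network")


connected = predict_with_fallback([1.0, 3.0], online_model, offline_model)
disconnected = predict_with_fallback([1.0, 3.0], unreachable_model, offline_model)
```

Returning the source alongside the prediction lets the calling code record which path produced a result. For latency-critical therapy decisions, a design that always uses the offline model may be the safer choice.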

Analytics

Analytics can help developers understand the results of offline ML. Because those results are produced on the device, developers must arrange for analytics to be sent to a remote server before they can analyze user actions and other business parameters.
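One possible approach, sketched below, is to keep only aggregate event counts on the device and upload them when a connection is available, so that no raw patient data leaves it. The `AnalyticsBuffer` class, the event names, and the `send` callable are hypothetical.

```python
import json
from collections import Counter
from typing import Callable, List


class AnalyticsBuffer:
    """Hypothetical buffer that stores event counts only, never raw readings."""

    def __init__(self) -> None:
        self._counts: Counter = Counter()

    def record(self, event: str) -> None:
        self._counts[event] += 1

    def flush(self, send: Callable[[str], None]) -> bool:
        """Upload the counts; keep them for a later attempt if the upload fails."""
        if not self._counts:
            return True
        payload = json.dumps(dict(self._counts), sort_keys=True)
        try:
            send(payload)
        except ConnectionError:
            return False
        self._counts.clear()
        return True


def failing_send(payload: str) -> None:
    """Simulates an upload attempt without connectivity."""
    raise ConnectionError("no network")


buffer = AnalyticsBuffer()
for event in ["inference_ok", "inference_ok", "model_updated"]:
    buffer.record(event)

sent: List[str] = []
first_try = buffer.flush(failing_send)   # device is offline, counts are kept
second_try = buffer.flush(sent.append)   # connection restored, counts are sent
```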

Compliance

Companies should consider HIPAA compliance if the application handles PHI.

Offline ML: Exploring Solutions

Significant research is underway to provide a framework and tools for running offline ML on mobile and low-power devices. In the meantime, you might like to check out the following available solutions.

Core ML

Core ML is Apple’s machine learning framework for building offline ML solutions into iOS-based applications. It provides support for image analysis, NLP, and sound analysis.

ML Kit

Google provides ML Kit as an SDK for Android and iOS-based applications. It offers Vision APIs (face detection, object tracking, and pose detection) and Natural Language APIs (smart reply and entity extraction).

TensorFlow Lite

TensorFlow Lite is a library for deploying models on mobile (Android, iOS, Mobile Web) and low-powered devices like microcontrollers. It also provides a complete lifecycle to create custom offline ML solutions.


PyTorch

Like TensorFlow Lite, PyTorch also provides libraries and components to build offline ML solutions for Android and iOS. In addition, developers can use the Cainvas platform to create ML solutions for targeted microcontrollers.

Coral.ai

Coral.ai provides a complete toolkit to perform offline ML on microcontroller-based devices. It also includes support for image segmentation, NLP-based key phrase detection, and speech recognition.

Key Takeaway

Many organizations are focusing on offline ML, and open-source communities are making progress in providing the support needed for mass integration. In healthcare, offline ML will eventually improve the lives of the patients that providers serve.

While medical applications can now run offline ML on mobile devices, it will take time before companies can use it on IoMT and therapy devices. Want to explore the possibilities? Get in touch with GlobalLogic’s healthcare technology team and we can reimagine what’s possible together.


References

Build beneficial and privacy preserving AI. Coral. Retrieved January 27, 2023, from https://coral.ai/

Cainvas. Retrieved January 27, 2023, from https://cainvas.ai-tech.systems/accounts/login/

Core ML: Integrate machine learning models into your app. Apple Developer Documentation. Retrieved January 27, 2023, from https://developer.apple.com/documentation/coreml

Machine learning for mobile developers. Google. Retrieved January 27, 2023, from https://developers.google.com/ml-kit/

On-device machine learning. Google. Retrieved January 27, 2023, from https://developers.google.com/learn/topics/on-device-ml

PyTorch Mobile: End-to-end workflow from Training to Deployment for iOS and Android mobile devices. PyTorch. Retrieved January 27, 2023, from https://pytorch.org/mobile/home/

TensorFlow Lite: ML for Mobile and edge devices. TensorFlow. Retrieved January 27, 2023, from https://www.tensorflow.org/lite