Making fast, context-aware decisions

In my last post in this series, we worked through a real-life example of an “Internet of Things” system, using Uber as a case study. We saw that the key characteristic of an IoT system is its ability to use the observations and requests it receives from sensors and people to make quick, context-aware decisions and then act on them. In this article, we’ll explore the heart of such a system and describe how such quick decision-making can be automated while taking into account the full “context” of the situation.

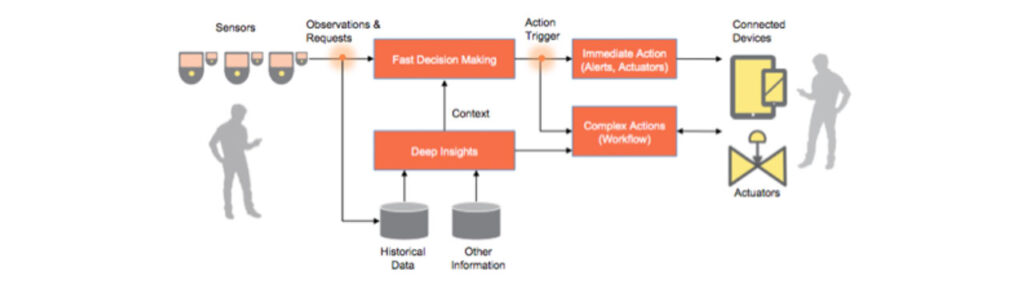

Figure 1: The heart of the basic IoT system architecture: data analytics

One widely accepted architecture for making fast, context-aware decisions is called the “Lambda Architecture”[1]. This architecture was originally proposed by former Twitter engineer and “Storm”[2] author Nathan Marz in a 2011 blog post[3], and is expanded upon in his book “Big Data”[4].

The concept behind Marz’s Lambda architecture is that there are two layers of analytics involved in rapid decision-making. One, called the “Batch Layer”, uses traditional big-data analytics techniques such as map-reduce to continuously mine multiple data sources for information, relationships and insights. This activity is termed “batch” because, while it may happen frequently, it is generally done on a scheduled basis rather than on demand in response to an external request or event. While big data analytic tools and data manipulation capabilities have improved enormously over the last several years, it still frequently takes minutes, tens of minutes, or even hours to run complex analysis tasks over large, heterogeneous data sets. This makes a “batch-oriented” big data analytics approach by itself impractical for rapid decision-making on the scale we need to support the Internet of Things: that is, action taken in tenths of a second (hundreds of milliseconds) or less. While it can be done in other ways, the batch layer corresponds to what we call “Deep Insights” in our basic IoT system architecture (Figure 1).

The second layer in Marz’s Lambda approach is called the “Speed Layer”. This is the layer responsible for rapid decision-making. As any engineer or manager knows, the key to quickly making good decisions is having all the relevant information available when you need it. In the Lambda architecture, the output of the “Batch” layer is continuously processed and the most recent results are made available to the speed layer; technically, these are provided in a view. The speed layer uses this pre-processed information whenever a new request comes in, enabling it to make an informed decision, in context, in literally the blink of an eye [5]. While it’s not the only approach, the speed layer corresponds to what we call “Fast Decision Making” in our basic IoT system architecture (Figure 1).
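To make the two layers concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the class names, the `(rider_id, fare)` event shape, and the "lifetime spend" question are hypothetical, and merging not-yet-batched events into the answer follows the general Lambda pattern rather than anything specific to a real ride service.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class BatchLayer:
    """Periodically recomputes a read-only view from the full event history."""
    master_log: list = field(default_factory=list)  # hypothetical (rider_id, fare) events
    view: dict = field(default_factory=dict)        # snapshot published to the speed layer

    def recompute(self):
        # Slow path: scan every stored event. At scale this would be a
        # map-reduce style job rather than an in-memory loop.
        totals = Counter()
        for rider_id, fare in self.master_log:
            totals[rider_id] += fare
        self.view = dict(totals)


@dataclass
class SpeedLayer:
    """Answers requests immediately using the latest batch view."""
    batch: BatchLayer
    recent: list = field(default_factory=list)  # events newer than the last batch run

    def record(self, rider_id, fare):
        self.recent.append((rider_id, fare))

    def lifetime_spend(self, rider_id):
        # Fast path: one lookup in the pre-computed view plus a small
        # correction for events the batch layer has not processed yet.
        base = self.batch.view.get(rider_id, 0.0)
        delta = sum(fare for rider, fare in self.recent if rider == rider_id)
        return base + delta


def run_batch_cycle(batch, speed):
    """Hand recent events to the batch layer and publish a fresh view."""
    batch.master_log.extend(speed.recent)
    speed.recent.clear()
    batch.recompute()
```

The point of the split is that `lifetime_spend` never scans the full history; the expensive scan happens on the batch layer's schedule, not the caller's.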

Other techniques besides Lambda are used when even faster results are required; for example, in automated stock trading, or bidding in ad networks. These techniques can deliver decisions on the order of tens of milliseconds, at the cost of some versatility. For interacting with humans, however, the Lambda approach gives a good combination of speed and configurability.

As we saw in our Uber example in Part 2 of this series, the decision on which driver and car are best to assign to a particular passenger pick-up request depends on a number of factors. The considerations might include:

- Are the car and driver available? That is, is the driver carrying another passenger right now, or on a break?

- How long will it take the car to get from where it is now to where the passenger is waiting to be picked up?

- Where does the passenger want to go? If they haven’t told us explicitly, what can we guess about where they are likely to go based on their past trips, or on the past trips of other people whose behavior is similar to theirs?

- If we don’t know and can’t guess where this particular passenger wants to go, what is the most likely destination or travel time of passengers being picked up from that location?

- How good a customer is the passenger requesting a ride? Should he or she be given special treatment? For example, if multiple people are waiting for rides in the same area, should this passenger be picked up out of turn?

- How much revenue does the driver generate for our company? Should this driver be given the pick of the likely best (largest) fares based on our policy?

- Of the possible drivers who are close, how much has each one made in fares so far today? Should we try to even this out, or reward the best drivers?

Again, a reminder that I have no inside knowledge of Uber’s algorithms; the factors Uber actually considers may be totally different from the ones I describe. In fact, they probably are different. However, these are representative of the kinds of information a similar service might want to include when assigning a car.
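One way a service might fold several of these considerations into a single choice is a weighted score per candidate driver. The sketch below is purely illustrative: the `Candidate` fields, the weights, and the linear scoring rule are all assumptions of mine, not a description of how Uber or any real service works.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    driver_id: str
    available: bool       # live: not carrying a passenger or on a break
    eta_minutes: float    # live: time to reach the waiting passenger
    driver_rank: float    # pre-computed: 0.0 (worst) to 1.0 (best)
    fares_today: float    # pre-computed: fares earned so far today


# Hypothetical weights. Negative values penalize a factor; a real service
# would tune these to reflect its own business policy.
WEIGHTS = {"eta_minutes": -1.0, "driver_rank": 3.0, "fares_today": -0.01}


def score(c):
    """Higher is better: a close, highly ranked driver who has earned less today."""
    return (WEIGHTS["eta_minutes"] * c.eta_minutes
            + WEIGHTS["driver_rank"] * c.driver_rank
            + WEIGHTS["fares_today"] * c.fares_today)


def pick_driver(candidates):
    """Return the best available candidate, or None if nobody is available."""
    eligible = [c for c in candidates if c.available]
    return max(eligible, key=score, default=None)
```

Changing the sign of the `fares_today` weight switches the policy from "even out earnings" to "reward the best earners", which is exactly the kind of business decision the last bullet above describes.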

Of the factors mentioned above, some you would probably want to pre-compute in “batch” mode include:

- For each pre-assigned behavior category, what is the likely destination given the type of pickup location in a given area? For example, an analysis might find that a person of behavior category “frequent business traveler” arriving at a pickup location of type “airport away from home” outside of regular business hours is most likely to want to go to a location of type “hotel” in a nearby metropolitan area. When arriving at “home airport” in the same situation, their most likely destination may be “home”.

- Without knowing anything about a particular passenger, what is the most likely destination and/or length of trip for each type of pickup location in a given area? For example, a pickup in a particular shopping area in Manhattan might most commonly end with a drop-off in another shopping area, while a pickup at an office building may have a different typical destination.

- Best customers (or customer ranking).

- Best drivers (or driver ranking).

- Aggregated total fares paid per driver since their current shift started.
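Two of these pre-computations can be sketched in a few lines of Python. The record shapes used here, `(rider_category, pickup_type, destination_type)` for trips and `(driver_id, amount)` for fares, are hypothetical, and a production batch job would run over a distributed data store rather than in-memory lists.

```python
from collections import Counter, defaultdict


def most_likely_destinations(trips):
    """Map each (rider_category, pickup_type) pair to its most common destination type.

    trips: iterable of (rider_category, pickup_type, destination_type) tuples.
    """
    counts = defaultdict(Counter)
    for category, pickup_type, destination_type in trips:
        counts[(category, pickup_type)][destination_type] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


def fares_per_driver(fares):
    """Total the fares for each driver.

    fares: iterable of (driver_id, amount) tuples, assumed to be already
    limited to each driver's current shift.
    """
    totals = defaultdict(float)
    for driver_id, amount in fares:
        totals[driver_id] += amount
    return dict(totals)
```

Both functions return plain dictionaries, so the results can be published as a view that the speed layer reads with a single key lookup.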

In the Lambda architecture, these factors and others would be analyzed by the “Batch Layer” on a scheduled basis (or accumulated and stored as they happen), and made available to the “Speed Layer” for its consideration when a passenger requests a pickup. The schedule of how often to update this information depends on how frequently each factor is likely to change, how costly each is to compute, and how important the up-to-the-minute accuracy of each piece of information is to the operation of the business. Factors that are quick to calculate or that change frequently (like the location of a car) are not computed by the batch layer, but instead are looked up or computed by the speed layer at the time a request comes in. As computers and analytics grow ever faster, the line between what can be computed “on the fly” and what is best computed in the batch layer will continue to shift. However, the key objective remains to make sure that at the time each decision is made, all the information needed is available to ensure the decision supports the goals of the system and organization that deployed it.
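The idea of giving each pre-computed factor its own update schedule can be sketched as follows. The view names and refresh intervals are hypothetical values chosen only to show the trade-off; timestamps are plain numbers in seconds.

```python
from dataclasses import dataclass


@dataclass
class ViewSpec:
    name: str
    refresh_seconds: float                 # how stale this view is allowed to become
    last_refreshed: float = float("-inf")  # never refreshed yet


def views_due(specs, now):
    """Return the names of views whose allowed staleness has elapsed."""
    return [s.name for s in specs if now - s.last_refreshed >= s.refresh_seconds]


def mark_refreshed(spec, now):
    """Record that the batch layer has just republished this view."""
    spec.last_refreshed = now


# Hypothetical schedule: fares change with every trip, rankings change slowly.
SPECS = [
    ViewSpec("driver_fares_today", refresh_seconds=60),
    ViewSpec("customer_ranking", refresh_seconds=24 * 60 * 60),
]
```

A cheap, fast-changing view gets a short interval and an expensive, slow-changing one gets a long interval; anything that must be current to the second, such as a car's location, stays out of this schedule and is looked up by the speed layer instead.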

A human operator or decision-maker would also, ideally, make decisions in a very similar way to the one we just outlined. Each decision would be aligned with the current, overall goals of the company, and would consider the full context. In the case of a taxi dispatcher, you would expect the very best ones to understand and account for these same factors whenever dispatching a cab. For example, if Mrs. Jones was an important customer—or the owner of the cab company—a human dispatcher would know this and would make sure she had the best experience possible. If Bob was their best driver and a lucrative fare came along, the human dispatcher might send Bob to pick that person up, to encourage his continued loyalty to the company. This type of contextual information is what you learn from experience, and accounting for it is what makes a human decision maker good at his or her job.

The Lambda architecture is one means of letting machines exhibit the same type of “intelligence” that you would hope to see in your best human employees when performing a similar quick decision-making task, consistently and at scale. In reality, of course, the machines are executing a program—more specifically, an analytic and decision-making algorithm—and not exhibiting intelligence in the same sense that a human would. In particular, the machines are not bringing to bear their general knowledge of the way the world works, and applying that general knowledge to a specific business situation. A human, for example, would probably not need to be taught that if Mrs. Jones owns the taxi company, then whenever she calls and urgently requests a cab you give her the one closest to her, even if that means another customer needs to wait a little longer. The human’s general social intelligence and life experience would, hopefully, make that the default behavior.

With the machine algorithm, the actual intelligence needs to come from the people developing the algorithm—both the programmers and the business people who work with them to craft the company goals. This hybrid combination of business, computer science, data analysis and algorithmic knowledge is an emerging field often called “data science”. Because the algorithms behind these decision-making systems directly drive business results—and, in a real sense, are the business—we are no longer talking about conventional IT systems. Conventional systems provide information to human decision-makers, but next-generation IoT systems actually are the decision makers. The staff developing the algorithms behind these systems needs to embody the business acumen and decision-making prowess that was formerly in human hands, and put these into algorithms and systems.

In one sense this is what computer science has always done: automate formerly human tasks and, to an extent, decision-making. However, the scope and impact of decision-making that is now practical and economically feasible based on current technology is a step-change. To address these upcoming business challenges, a whole new level of thinking is required, demanding business-oriented developers and development-oriented business people. Over time I believe we will see these people become as important to next-generation internet businesses as “quants” have become to Wall Street.

Our goal in this blog has been to show you how the “heart” of an IoT system—its analytics capability—can deliver context-aware decisions in fractions of a second. In our next post in this series, we’ll talk about the technical and economic factors that will make the Internet of Things a practical reality.

Dr. Jim Walsh is CTO at GlobalLogic, where he leads the company’s innovation efforts. With a Ph.D. in Physics and over 30 years of experience in the IT sector, Dr. Walsh is a recognized expert in cutting-edge technologies such as cloud and IoT.

References

[1] http://lambda-architecture.net

[2] http://en.wikipedia.org/wiki/Storm_(event_processor)

[3] http://nathanmarz.com/blog/how-to-beat-the-cap-theorem.html

[4] http://manning.com/marz/

[5] A normal human eye blink takes in the range of 100 to 400ms; http://en.wikipedia.org/wiki/Blink.
