- Services

Technology Capabilities

- Product Strategy & Experience Design: Define software-driven value chains, create purposeful interactions, and develop new segments and offerings.

- Digital Business Transformation: Advance your digital transformation journey.

- Intelligence Engineering: Leverage data and AI to transform products, operations, and outcomes.

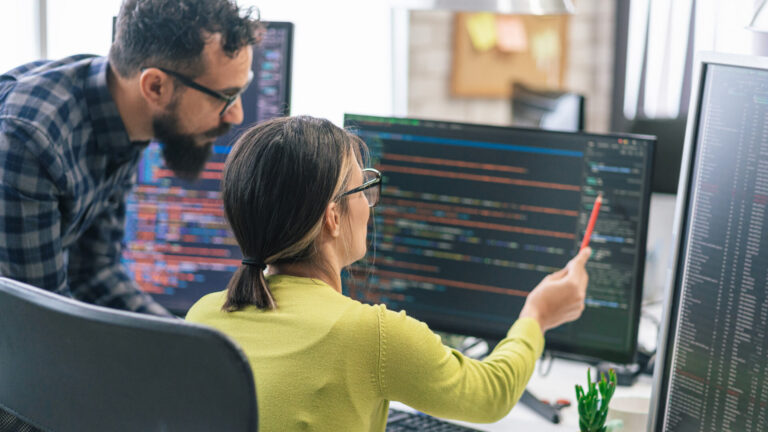

- Software Product Engineering: Create high-value products faster with AI-powered and human-driven engineering.

- Technology Modernization: Tackle technology modernization with approaches that reduce risk and maximize impact.

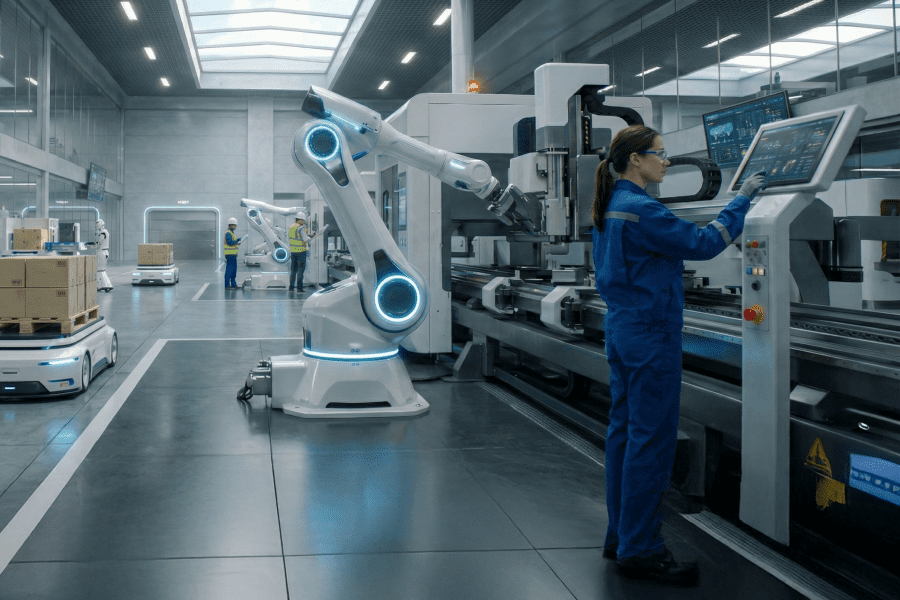

- Embedded Engineering & IT/OT Transformation: Develop embedded software and hardware. Build IoT and IT/OT solutions.

- Industries

- GlobalLogic VelocityAI

- Insights

Case Studies | GlobalLogic | From Legacy to Leading-edge: A Global Software Leader’s ...

Discover how GlobalLogic’s AI-powered solutions helped a global software leader migrate...


Case Studies | GlobalLogic | Streamlining API Documentation with GenAI-Enabled Automation

Discover how GlobalLogic helped a leading rental solutions provider automate API docume...

- About

Media Coverage | GlobalLogic | December 22, 2025 | GlobalLogic sees higher conversion rate of AI PoCs to deployment

In exclusive coverage with @businessstandard, our Chief Delivery Officer, Ethan Maty...


Media Coverage | GlobalLogic | December 7, 2025 | Hub & Spoke model: GlobalLogic’s expansion strategy into ...

In a recent @ETHRWorld feature, our Talent Acquisition Head, Shuchita Shukla, shares ho...

- Careers

Blogs | GlobalLogic | 22 February 2026 | If You Build Products, You Should Be Using Digital Twins

Digital twins are the foundation of modern product ...

Blogs | GlobalLogic | 18 December 2025 | Physical AI: Bringing Intelligence to the Edge of Action

At GlobalLogic, we’re building systems that don’t just ...
