Operationalization is one of the technology industry’s current buzzwords. Even so, it is surprising how many areas of technology now carry the “Ops” label: AnalyticsOps, AppOps, CloudOps, DevOps, DevSecOps, and DataOps.

Although companies rely on their people and data, creating meaningful data is still a challenge for many of them. Obtaining the right data at the right time can bring tremendous value to any company. Today, most organizations focus on collecting insightful data and on consolidating their data infrastructure and operations into a unified data platform.

This consolidation is the answer to data centralization. All forms of data have a lifecycle and flow through certain steps before the information becomes usable. The usual outcome is a highly scalable data platform built with the latest technology, on which company operations are expected to run smoothly.

Now, we must consider whether scalable technology and an end-to-end data pipeline are enough on their own. Beyond building a functional pipeline, specific challenges remain that can cause customer dissatisfaction and lost revenue.

These challenges include:

Growing demand for data.

Today, companies rely heavily on data to generate insights that inform decisions. They collect many forms of data from numerous sources, and this data affects business growth and revenue. However, about 80% of that data is unstructured. Companies can extract value from this unstructured, or dark, data by applying artificial intelligence and machine learning methodologies.

The complexity of data pipelines and scarcity of skilled people.

Data comes from multiple sources, and it is diverse and complex because numerous rules and transformations apply to it throughout the pipeline. To address these complexities, companies are looking for skilled data engineers, data architects, and data scientists who can help build scalable and efficient pipelines. Finding these qualified individuals to meet demand and create automated processes is a challenge every company faces.

Too many defects.

Even after rigorous quality checks, the complexity of these data pipelines means that defects are still released to production. Once a defect is reported in production, it takes time to analyze and fix, leading to missed SLAs and customer dissatisfaction.

Speed and accuracy of data analytics.

Every company wants efficient and accurate analytics. However, when teams work in silos, it becomes challenging to create effective data pipelines, because operations and data teams must collaborate closely to identify requirements accurately before implementation.

Nearly every company aims to deliver fast, reliable, and cost-effective products to customers while generating revenue. Accurate and reliable data is the force behind this goal, and DataOps is the methodology for building a data ecosystem that helps industries capitalize on revenue streams.

What is DataOps?

Gartner’s Definition

“DataOps is a collaborative data management practice focused on improving the communication, integration and automation of data flows between data managers and data consumers across an organization.”

In other words, the goal of DataOps is to optimize the development and execution of the data pipeline. Therefore, DataOps focuses on continuous improvement.

Dimensions of DataOps

DataOps is not an exact science; it draws on several disciplines to overcome development challenges. At a high level, however, it can be broken down into the following dimensions:

- Agile:
  - Short sprints
  - Self-organized teams
  - Regular retrospection
  - Keep customer engaged
- Total Quality Management:
  - Continuous monitoring
  - Continuous improvement
- DevOps:
  - TDD approach
  - CI/CD implementation
  - Version Control
  - Maximize automation

Looking at these dimensions, it’s clear we need competent teams to implement them. To create the DataOps processes, we require technical teams of data engineers, data scientists, and data analysts. These teams must collaborate and integrate their plans with business teams of data stewards, CDOs, product owners, and admins who help define, operate, monitor, and deploy the components that keep business processes running.
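To make the TDD item of the DevOps dimension more concrete, here is a minimal sketch of a test-first check for a single pipeline transformation. The `normalize_amount` function and the record fields are hypothetical and chosen only for illustration; the article does not prescribe a specific tool or test framework.

```python
import unittest


def normalize_amount(record):
    """Hypothetical transform: return a copy of the record with 'amount' parsed to a float."""
    cleaned = dict(record)  # copy so the input record is not mutated
    raw = str(record["amount"]).replace(",", "").strip()
    cleaned["amount"] = float(raw)  # raises ValueError for non-numeric input
    return cleaned


class NormalizeAmountTest(unittest.TestCase):
    """Tests written before (or alongside) the transform, in TDD style."""

    def test_parses_thousands_separator(self):
        result = normalize_amount({"id": 1, "amount": "1,250.50"})
        self.assertEqual(result["amount"], 1250.5)

    def test_rejects_non_numeric_amount(self):
        with self.assertRaises(ValueError):
            normalize_amount({"id": 2, "amount": "n/a"})
```

Saved as a module, these tests could run with `python -m unittest` on every commit, which is one way a CI/CD pipeline might surface a broken transformation before it reaches production.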

Addressing Challenges and Data Monetization Using DataOps

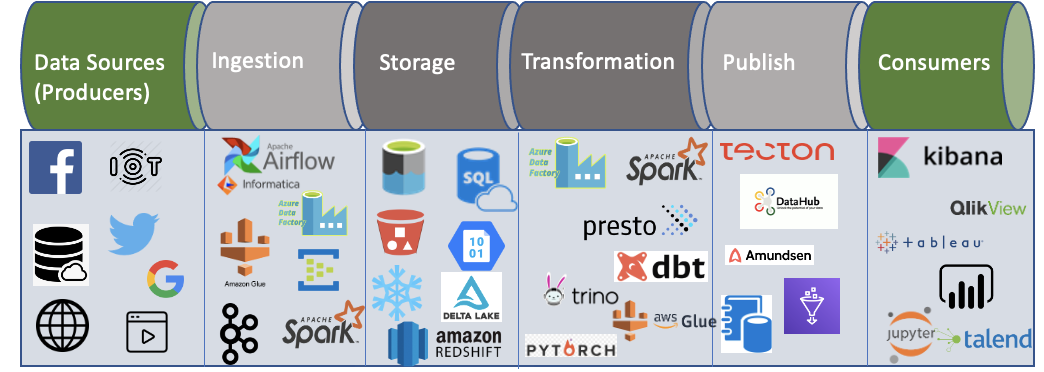

Figure One

In the data platform, the data lifecycle goes through multiple steps. Figure One shows that data comes from different sources, including structured and unstructured data, video, and text. After a batch or streaming engine processes the data, it becomes meaningful information stored in the data lake or polyglot storage. The stored data is then published through the consumer layer for downstream or consuming systems.

In this data flow, we usually focus on collecting data while keeping business objectives in mind and we create clean, structured data from transactional systems or warehouses. Generally, companies require this data from the consumer. Still, several questions remain:

- Are we reaping any actual benefits from the required data?

- Are data quality issues reported at the right time?

- Do we have an efficient system that points out problematic areas?

- Are we using the right technology to help monitor and report our system issues effectively?

So how can DataOps address the challenges mentioned above and deliver the correct information at the right time?

To explain this, we’ll use the following example.

Example: Identifying defects in earlier phases of development helps companies monetize their data.

We develop data pipelines focused on business functionality using the latest technology. When the teams deliver the final product to production, multiple defects arise. Once the system reports a defect, it takes a significant amount of time to analyze and fix the problem. These pipelines are often critical and have specific SLAs and constraints, and by the time the teams resolve the problem, the SLA has already been missed.
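One way to catch such defects earlier is a quality gate that checks each batch before it is promoted to production. The following is a minimal sketch under stated assumptions: the `quality_gate` function, the `GateResult` type, and the 5% null threshold are all hypothetical and are not taken from the article.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    passed: bool
    failures: list


def quality_gate(rows, required_fields, max_null_ratio=0.05):
    """Check a batch before promotion; the threshold is an illustrative assumption."""
    if not rows:
        return GateResult(False, ["batch is empty"])
    failures = []
    for field in required_fields:
        # Count rows where the field is missing, None, or an empty string.
        nulls = sum(1 for row in rows if row.get(field) in (None, ""))
        ratio = nulls / len(rows)
        if ratio > max_null_ratio:
            failures.append(f"{field}: {ratio:.0%} null exceeds {max_null_ratio:.0%}")
    return GateResult(not failures, failures)
```

A pipeline could call `quality_gate(batch, ["id", "amount"])` after the transformation step and stop the release when `passed` is false, so the defect is reported during development rather than after an SLA has already been missed.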

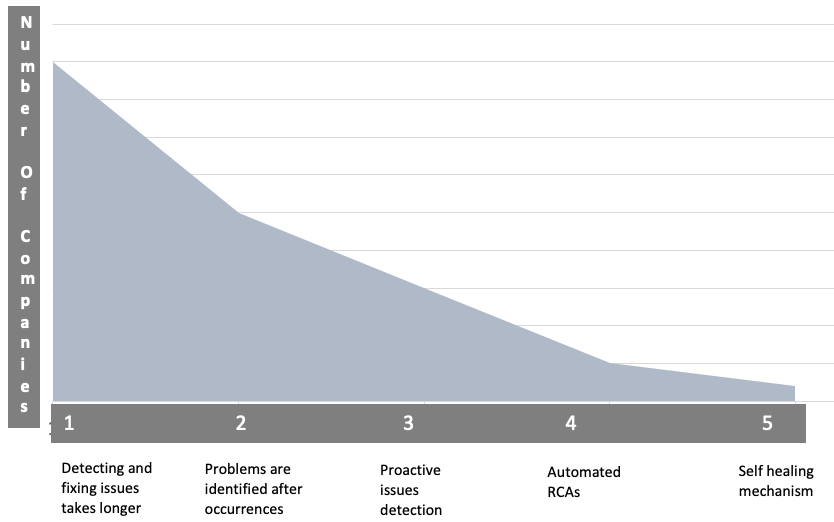

Figure Two

I’ve worked with multiple companies to design their data pipelines. When I map those pipelines onto five maturity levels, the distribution looks like the one pictured in Figure Two.

Most companies are only at maturity level one or two, where issues are either detected only in production or take a long time to fix once identified. Very few companies have mature processes in which they proactively detect issues or generate automated root cause analyses (RCAs) with a self-healing mechanism. Through DataOps processes and methodology, companies can achieve higher maturity levels.

To mature our data pipeline, we must add a highly collaborative data and operations team that works in tandem to set goals and optimize processes, technologies, and methodologies. This collaboration helps to proactively identify slow or problematic data, automatically report root cause analysis, and operate self-healing systems. Today, companies rely heavily on artificial intelligence and machine learning to automate bug reporting and self-healing. These systems expedite the overall process, helping teams meet defined SLAs and gain customer satisfaction.
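As a rough illustration of self-healing with automated reporting, the sketch below retries a failing pipeline step and records every failure so that a root cause report can be assembled afterwards. It uses a plain retry loop rather than the AI and machine learning techniques mentioned above, and the function name, parameters, and result fields are assumptions made for this example.

```python
import time


def run_with_self_healing(step, max_attempts=3, backoff_seconds=0.0):
    """Run a pipeline step, retrying on failure and logging each error for later analysis."""
    incidents = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = step()
            return {"status": "ok", "result": result,
                    "attempts": attempt, "incidents": incidents}
        except Exception as exc:  # broad on purpose: this is only a sketch
            incidents.append({"attempt": attempt,
                              "error": type(exc).__name__,
                              "detail": str(exc)})
            time.sleep(backoff_seconds * attempt)  # wait longer after each failure
    return {"status": "failed", "result": None,
            "attempts": max_attempts, "incidents": incidents}
```

The `incidents` list is the raw material for an automated RCA: even when a retry succeeds, the team can see which step failed, how often, and with what error, instead of learning about the problem from a production report.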

Summary

DataOps is a combination of processes and technologies that automates quality data delivery to improve data value according to business objectives and requirements. It can shorten the data cycle time, reduce data defects, increase code reuse, and accelerate business operations by creating more efficient and agile processes with timely data insights.

DataOps can increase overall performance through higher output, quality, and productivity within SLAs. Proper DataOps processes, governance teams, and technology can also help industries capitalize on revenue streams. Today, data is one of the most valuable resources there is, and it is a driving force behind any company’s growth potential.
