
This blog was originally posted by GlobalLogic's experience design arm, Method.

Contents

- Mixed Reality is a new, fundamentally different medium.

- The current, default interaction model is quite simple and limits what we can do with MR.

- To improve it, we need to look into natural ways of interacting with the world and do four things:

  - Place interface elements on real-world surfaces for tactile feedback.

  - Allow for direct manipulation of virtual objects.

  - Use spatial anchors to expand interfaces beyond the desktop.

  - Utilize 3D sound to enhance the experience with directional cues.

This will allow us to do two amazing things: make us superhumans, and let us collaborate around virtual objects, adding the human element back into tech.

Intro

Although Virtual Reality headsets, devices, and applications have been around for a few decades now, Mixed Reality, a subset of Augmented Reality, has only recently matured beyond experimental prototypes. At Method, we've built prototypes to explore natural ways of interacting with it, and looked at its potential uses beyond the usual fun and games.

What is this Mixed Reality you speak of?

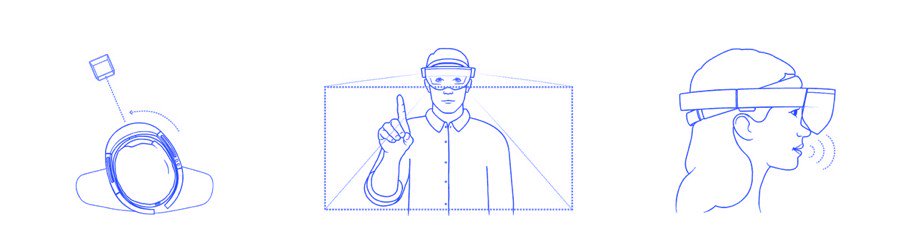

When we talk about Mixed Reality, we are most often referring to Microsoft's HoloLens, a headset that, unlike its VR counterparts, lets you see the world around you as if you were looking through a pair of sunglasses. HoloLens overlays virtual objects, or holograms, onto that view. Since there is no need to record the "real" world with a camera and play it back on a screen (as AR applications on your phone do), there's no lag or latency in your perception: you see what you would normally see, with added objects from the virtual dimension. Apart from scanning the space around you in 3D in real time and carrying a whole array of accelerometers, compasses, and other sensors, it also includes a pair of speakers that create the perception of true spatial sound.

What’s wrong with the current interaction model?

The current interaction model is based on an old VR paradigm: you use your gaze to move a reticle in the centre of the screen onto an object, then use a "pinch" gesture to interact with it. This doesn't take much work to implement, but it is unnatural and rather unintuitive. How often do we stand still, move our head, and raise our arms midway into the air? It's also disappointing: on a device that scans your gestures and the environment, and knows exactly where in the room you are in real time, this model leaves the user feeling handicapped instead of empowered.
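To make the "easy to implement" point concrete, here is a minimal sketch of gaze-and-pinch targeting in plain Python. It is not the HoloLens or Unity API: it assumes each hologram is approximated by a bounding sphere, casts a single ray from the head along the gaze direction, and treats the pinch purely as a trigger. `Hologram`, `gaze_target`, and `on_pinch` are hypothetical names for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


@dataclass
class Hologram:
    name: str
    center: Vec3
    radius: float  # assumed bounding sphere, used as a cheap hit volume


def ray_hit_distance(origin: Vec3, direction: Vec3, obj: Hologram) -> Optional[float]:
    """Distance along a unit-length ray to obj's bounding sphere, or None on a miss."""
    oc = sub(origin, obj.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - obj.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)      # nearer intersection
    if t < 0:
        t = -b + math.sqrt(disc)  # head is inside the sphere: use the far side
    return t if t >= 0 else None


def gaze_target(head: Vec3, gaze: Vec3, objects: Sequence[Hologram]) -> Optional[Hologram]:
    """Nearest hologram under the reticle fixed at the centre of view."""
    direction = normalize(gaze)
    best, best_t = None, math.inf
    for obj in objects:
        t = ray_hit_distance(head, direction, obj)
        if t is not None and t < best_t:
            best, best_t = obj, t
    return best


def on_pinch(head: Vec3, gaze: Vec3, objects: Sequence[Hologram]) -> str:
    """The pinch only says 'act now'; the head ray decides on what."""
    target = gaze_target(head, gaze, objects)
    return f"select {target.name}" if target else "nothing selected"


scene = [Hologram("cube", (0.0, 0.0, 2.0), 0.5), Hologram("lamp", (1.0, 0.0, 2.0), 0.3)]
print(on_pinch((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene))  # select cube
```

Note how little of the device's sensing this uses: only the head pose, plus one binary gesture.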

Because MR is fundamentally a new medium that augments the real world rather than transporting us into a virtual one, we have to look for interaction cues in how we perceive the world around us.

Humans today are more or less the same as humans 50,000 years ago. We perceive things through a combination of senses, usually giving the most importance to vision, followed by sound, smell, touch, and taste. We interact with the world mostly by touch: we press buttons, touch surfaces, rotate knobs, and move objects physically. Touch is closely followed by voice, when we state our intentions and engage in conversation. More subtly, we use vision: we look at something and, sometimes on an unconscious level, the people around us turn to look at it too.

In our workplaces and homes, we constantly move through functional areas: from desks, to workbenches, to pinned walls and kitchen tops.

How do we improve it?

At Method we think there are four ingredients that would make interacting with the virtual dimension a better, more intuitive experience:

- Place interface elements on real-world surfaces for tactile feedback

- Allow for direct manipulation of virtual objects

- Use spatial anchors to expand interfaces beyond the desktop

- Utilize 3D sound to enhance the experience with directional cues

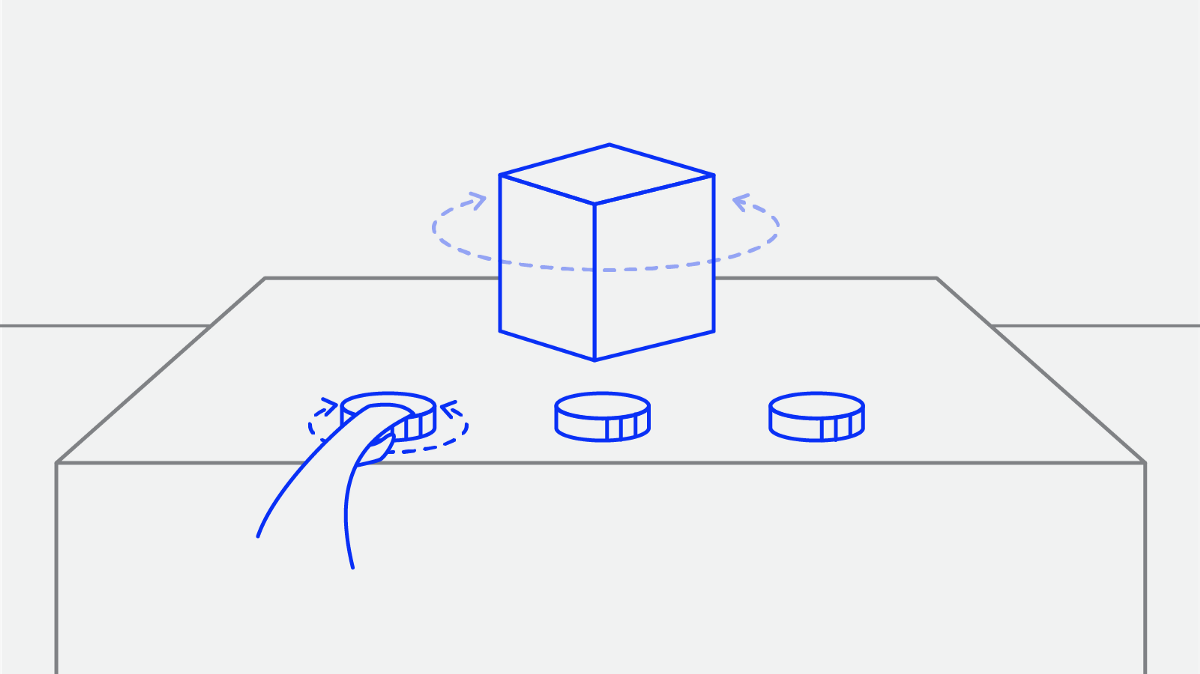

Place interface elements on real-world surfaces for tactile feedback

Placing interface elements on surfaces and real-world objects lets users interact with virtual elements the way they would with real things. Not only can they see them, they can also feel when they've touched them, because the physical surface and its texture are actually there. This placement makes precise input feel intuitive, and it removes the ergonomic stress of holding your arm out in mid-air (which is still a problem with ultrasound haptic interfaces).
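A rough sketch of why surface-bound controls are easy to work with, assuming the headset supplies a detected plane (for example a tabletop from spatial mapping) and a tracked fingertip position. Because the real surface physically stops the finger, a "press" can be detected as the fingertip being within a small distance of that plane and inside the button's footprint. The `Surface`, `place_on_surface`, and `is_pressed` names and the 1 cm tolerance are illustrative assumptions, not part of any headset SDK.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Surface:
    """A real-world plane, e.g. a tabletop found by the headset's spatial mapping (assumed)."""
    point: Vec3   # any point on the surface
    normal: Vec3  # unit normal pointing away from the surface

    def signed_distance(self, p: Vec3) -> float:
        return dot(sub(p, self.point), self.normal)

    def project(self, p: Vec3) -> Vec3:
        d = self.signed_distance(p)
        n = self.normal
        return (p[0] - d * n[0], p[1] - d * n[1], p[2] - d * n[2])


@dataclass
class SurfaceButton:
    center: Vec3  # lies on the surface
    radius: float


def place_on_surface(surface: Surface, wanted: Vec3, radius: float) -> SurfaceButton:
    """Snap a virtual button onto the real surface instead of leaving it in mid-air."""
    return SurfaceButton(surface.project(wanted), radius)


def is_pressed(surface: Surface, button: SurfaceButton, fingertip: Vec3,
               tolerance: float = 0.01) -> bool:
    """The physical surface stops the finger, so 'touching' means the tracked fingertip
    is within `tolerance` metres of the plane and inside the button's footprint."""
    touching = abs(surface.signed_distance(fingertip)) <= tolerance
    offset = sub(surface.project(fingertip), button.center)
    return touching and dot(offset, offset) <= button.radius ** 2


table = Surface(point=(0.0, 0.75, 0.0), normal=(0.0, 1.0, 0.0))
button = place_on_surface(table, wanted=(0.2, 0.9, 0.5), radius=0.03)
print(is_pressed(table, button, fingertip=(0.21, 0.755, 0.5)))  # True: finger resting on the table
print(is_pressed(table, button, fingertip=(0.2, 0.8, 0.5)))     # False: finger hovering 5 cm above
```

The tolerance absorbs tracking noise; the table itself does the rest of the work that an ultrasound or vibration device would otherwise have to fake.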

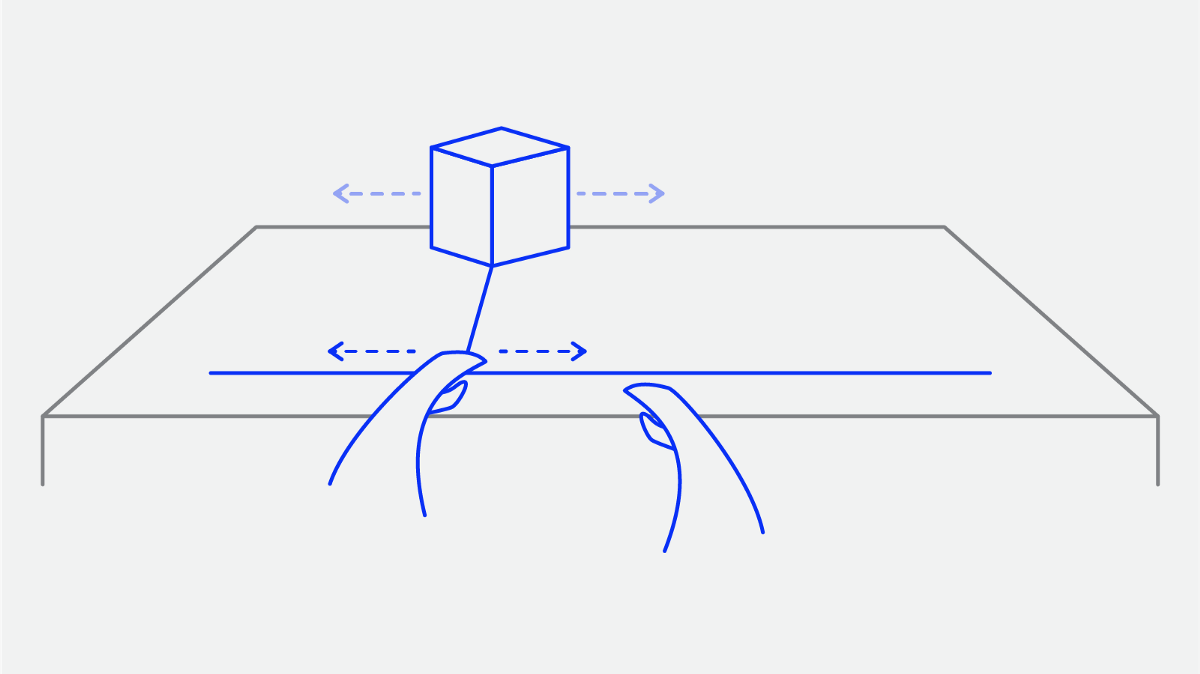

Allow for direct manipulation of virtual objects

Let users manipulate objects directly, for example to move or rotate something, instead of having to go through an interface element. This reduces cognitive load, letting them forget about the interface itself and focus on the task they're performing. If objects are floating in mid-air, a simple guide metaphor can help with interaction, again relating back to the actual sensation of touch.
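A minimal sketch of direct manipulation, assuming the headset reports a hand pose every frame: once the user grabs the object, it simply copies the hand's movement and twist relative to the moment of the grab, so there is no slider or rotation handle to learn. For brevity it only tracks rotation about the vertical axis (enough for turning a cup on a table), and the object spins about its own centre rather than orbiting the hand; a full six-degree-of-freedom version would use quaternions. `Pose` and `DirectManipulation` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    position: Vec3
    yaw: float  # rotation about the vertical axis, in radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


class DirectManipulation:
    """While grabbed, the object copies the hand's movement and twist: no slider or gizmo."""

    def __init__(self, obj: Pose) -> None:
        self.obj = obj
        self._grab: Optional[Tuple[Pose, Pose]] = None  # (hand pose, object pose) at grab time

    def grab(self, hand: Pose) -> None:
        self._grab = (hand, self.obj)

    def update(self, hand: Pose) -> None:
        if self._grab is None:
            return  # not holding anything: hand movement is ignored
        hand0, obj0 = self._grab
        moved = tuple(h - h0 for h, h0 in zip(hand.position, hand0.position))
        twisted = hand.yaw - hand0.yaw
        self.obj = Pose(
            position=tuple(o + m for o, m in zip(obj0.position, moved)),
            yaw=wrap_angle(obj0.yaw + twisted),
        )

    def release(self) -> None:
        self._grab = None


cup = DirectManipulation(Pose(position=(0.0, 0.0, 1.0), yaw=0.0))
cup.grab(Pose(position=(0.1, 0.0, 0.9), yaw=0.2))
cup.update(Pose(position=(0.2, 0.0, 0.9), yaw=0.2 + math.pi / 2))  # slide 10 cm, twist 90°
cup.release()
print(round(cup.obj.position[0], 3), round(math.degrees(cup.obj.yaw)))  # 0.1 90
```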

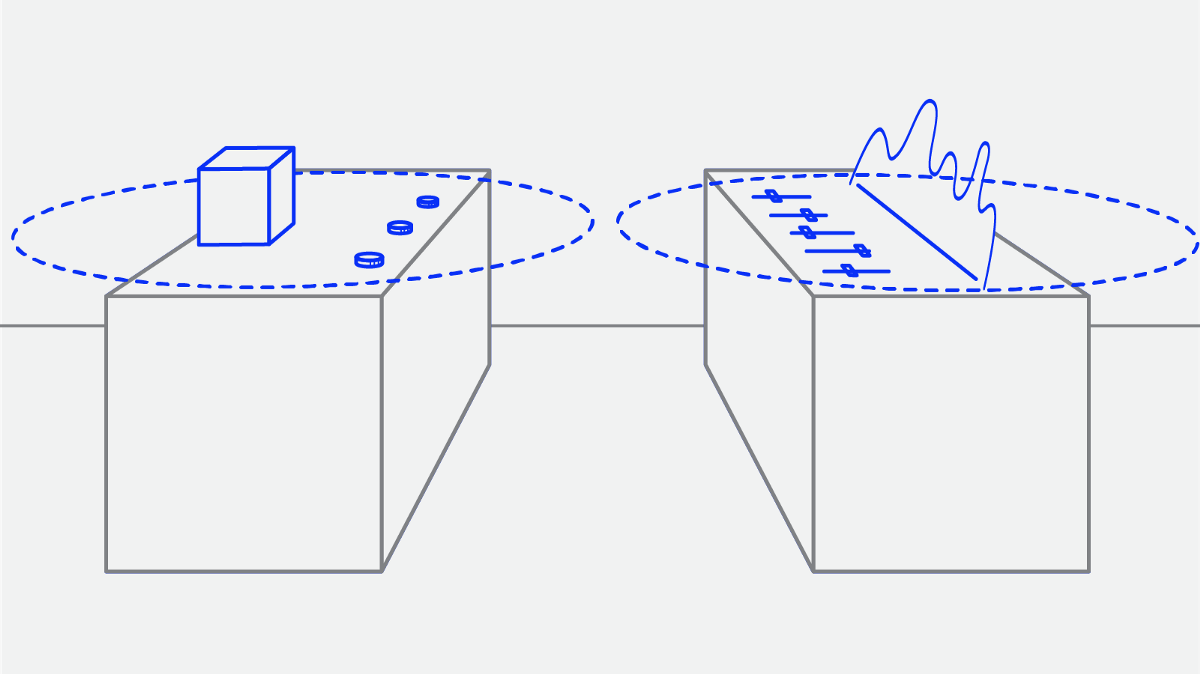

Use spatial anchors to expand interfaces beyond the desktop

With MR headsets like HoloLens, we can position objects and interface elements in the space around us, not just on a table right in front of us. This expands our useful interface area beyond the desktop and lets us create focused interface areas that we physically move through, much like we do in a real-world workshop. As a by-product, this can also simplify individual interfaces and help with some of the issues caused by limited detection resolution.
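One way to sketch anchored functional areas, under simplifying assumptions: each area is a world-locked position (in practice restored from the platform's spatial-anchor mechanism, which is not modelled here) with an activation radius, and only the area the user is standing in shows its panels. That is what lets each individual interface stay small and focused. `AnchoredArea`, `active_area`, `visible_panels`, and the example room layout are all hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AnchoredArea:
    name: str
    position: Vec3  # world-locked position, e.g. restored from a persisted spatial anchor
    radius: float   # how close (metres) the user must be for this area to become active
    panels: List[str] = field(default_factory=list)


def active_area(user: Vec3, areas: List[AnchoredArea]) -> Optional[AnchoredArea]:
    """The nearest anchored area whose radius contains the user, if any."""
    best, best_dist = None, math.inf
    for area in areas:
        dist = math.dist(user, area.position)
        if dist <= area.radius and dist < best_dist:
            best, best_dist = area, dist
    return best


def visible_panels(user: Vec3, areas: List[AnchoredArea]) -> List[str]:
    """Only the area the user is standing in shows its (simpler, focused) interface."""
    area = active_area(user, areas)
    return area.panels if area else []


room = [
    AnchoredArea("desk", (0.0, 0.0, 0.0), 1.5, ["calendar", "email"]),
    AnchoredArea("workbench", (4.0, 0.0, 0.0), 1.5, ["schematic", "parts list"]),
    AnchoredArea("wall", (0.0, 0.0, 5.0), 2.0, ["project board"]),
]
print(visible_panels((3.5, 0.0, 0.2), room))  # ['schematic', 'parts list']
```

Walking from the desk to the workbench swaps the whole interface, the same way picking up a different tool does in a workshop.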

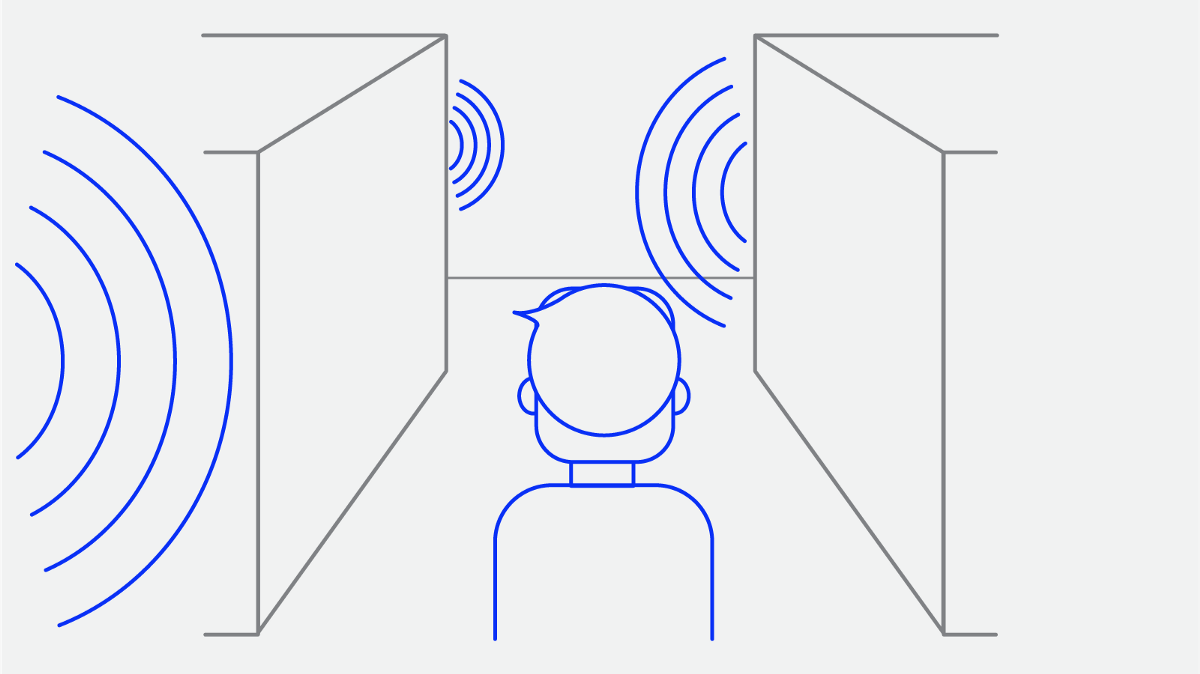

Utilize 3D sound to enhance the experience with directional cues

Spatial sound can give us subtle cues about the status and position of objects around us, from clicks that provide audio feedback when we hit interface buttons to gentle notifications from faraway objects. Sound works on a more unconscious level than vision. While we consciously direct our vision towards objects, sound travels from its emitter towards us. This means we can not only notify users of an object's status (for example, a train platform or an oven left on), but also tell them where it is and direct them towards it.

Combined with the headset's other sensors and voice control, this allows for some very advanced yet naturally intuitive scenarios. For example, a 3D notification sounds from the direction of an object; the user turns towards it, narrowing the context of interest; they issue a voice command that now applies specifically to that object, which reduces the load on the AI interpreting it; and the machine responds accordingly.
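The directional-cue scenario can be sketched in plain Python, under clearly simplified assumptions: positions live on a 2D floor plane, the cue is rendered with constant-power stereo panning (a crude stand-in for the head-related transfer functions that spatial-audio engines typically use, and unable to tell front from back), and a voice command is routed to whichever object sits within a narrow cone of the user's heading. All names, the 15° cone, and the kitchen layout are illustrative assumptions, not a HoloLens API.

```python
import math
from typing import Dict, Optional, Tuple

Vec2 = Tuple[float, float]  # (x, z) on the floor plane: +x right, +z straight ahead at yaw 0


def relative_azimuth(head: Vec2, head_yaw: float, source: Vec2) -> float:
    """Direction of a source relative to where the user faces, in radians.
    0 = straight ahead, positive = to the right; yaw grows as the user turns right."""
    dx, dz = source[0] - head[0], source[1] - head[1]
    a = math.atan2(dx, dz) - head_yaw
    return math.atan2(math.sin(a), math.cos(a))  # wrap to [-pi, pi]


def stereo_gains(azimuth: float) -> Tuple[float, float]:
    """Constant-power pan: returns (left, right) with left**2 + right**2 == 1."""
    pan = math.sin(azimuth)             # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4   # maps pan to 0 .. pi/2
    return math.cos(angle), math.sin(angle)


def object_in_focus(head: Vec2, head_yaw: float, objects: Dict[str, Vec2],
                    cone_deg: float = 15.0) -> Optional[str]:
    """The object the user is facing, within a narrow cone, or None."""
    best, best_err = None, math.radians(cone_deg)
    for name, pos in objects.items():
        err = abs(relative_azimuth(head, head_yaw, pos))
        if err <= best_err:
            best, best_err = name, err
    return best


def route_voice_command(command: str, head: Vec2, head_yaw: float,
                        objects: Dict[str, Vec2]) -> str:
    """Scope a voice command to the faced object, so the recogniser need not guess."""
    target = object_in_focus(head, head_yaw, objects)
    return f"{target}: {command}" if target else f"ambiguous: {command}"


kitchen = {"oven": (2.0, 0.0), "radio": (0.0, 3.0)}
left, right = stereo_gains(relative_azimuth((0.0, 0.0), 0.0, kitchen["oven"]))
print(round(left, 2), round(right, 2))  # 0.0 1.0: the oven chimes from the right
print(route_voice_command("turn off", (0.0, 0.0), math.pi / 2, kitchen))  # oven: turn off
```

Turning towards the sound is what disambiguates "turn off": facing the radio instead routes the same words to the radio, and facing neither leaves the command unresolved.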

Examples created along the way

While prototyping, we built a set of examples that illustrate the ingredients above in practice. We'll be publishing the code as open source on GitHub later this month, so you can try the examples yourself.

- Rotating Buddha: demonstrates how mapping rotation to a tea cup makes for a more tangible interface

- Robot arm: showcases direct manipulation of a virtual object

- Morning briefing: illustrates how different functional spaces, such as entertainment and planning, interconnect

- Finding your keys (right): shows 3D sound combined with visual cues, helping you navigate space and find what you've lost

Amazing things to come

Mixed Reality shows great potential in applications that mix the real with the virtual: not complete immersion, as in VR simulations or games, but augmentation or enhancement.

The ability to augment real-world objects with contextual information unlocks a wide range of opportunities that will make us feel like superhumans: from enabling us to become expert navigators, to upskilling manual workforces, to augmented remote assistance in the field. Combined with the ability to create interactive three-dimensional virtual prototypes that we can collaborate around in real time without losing the human connection, the new medium of MR could very well be the next big interaction paradigm.
