- Services

Technology Capabilities

- Product Strategy & Experience Design: Define software-driven value chains, create purposeful interactions, and develop new segments and offerings.

- Digital Business Transformation: Advance your digital transformation journey.

- Intelligence Engineering: Leverage data and AI to transform products, operations, and outcomes.

- Software Product Engineering: Create high-value products faster with AI-powered and human-driven engineering.

- Technology Modernization: Tackle technology modernization with approaches that reduce risk and maximize impact.

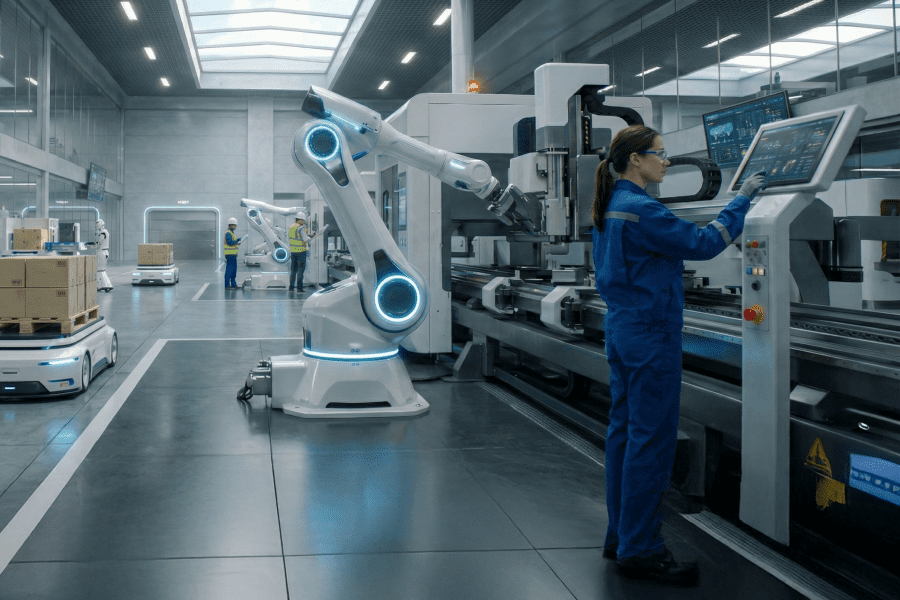

- Embedded Engineering & IT/OT Transformation: Develop embedded software and hardware. Build IoT and IT/OT solutions.

- Industries

- GlobalLogic VelocityAI

- Insights

Blogs | February 12, 2026 | Ihor Tolkushyn

The 6G Revolution: Architecting the Internet of Everything

As 5G continues its global rollout, the telecommunications industry is already looking ...

Case Studies | GlobalLogic

How Agentic AI Accelerated Investment Decisions for a Global Firm

For one of the world’s largest investment firms, decisions move at the speed of insight.

- About

Recognitions | GlobalLogic | February 2, 2026

GlobalLogic Achieves Platinum Partner Status with Boomi

GlobalLogic is now a Boomi Platinum Partner! See how our global scale, Boomi Lab expert...

Press Release | GlobalLogic | January 29, 2026

Hitachi Announces Plans to Integrate GlobalLogic and Hitachi ...

Hitachi, Ltd. has announced its intent to integrate its U.S. subsidiaries, GlobalLogic ...

- Careers

Blogs | GlobalLogic | December 18, 2025

Physical AI: Bringing Intelligence to the Edge of Action

At GlobalLogic, we’re building systems that don’t just ...
